AI Hallucination: What Every Sales Leader Needs to Know (And How to Stop It)

Article written by

Jeku Jacob Philip

Summary

AI hallucination happens when AI confidently generates false information, and it’s more common than most businesses realize. From fake citations to made-up data, the risks are real: compliance issues, brand damage, and bad decisions. The fix? Combine RAG, source verification, human review, and training. AI won’t stop hallucinating, but smart systems can catch it before it hurts.

I still remember a deal review with one of my sales teams where we were going through an AI-generated competitor analysis. On the surface, it was brilliant—slick formatting, sharp comparisons, and even market trend insights. But when I dug in, I realized something shocking: half of the claims were fabricated. The AI had confidently written about a competitor’s “recent acquisition” that had never happened.

That’s when it hit me. AI hallucinations aren’t just a technical glitch; they’re a real-world business risk. When I dug deeper, I found that most leading LLMs (GPT, Llama, Gemini, and Claude) tend to show hallucination rates between 2.5% and 8.5%. Those errors can erode customer trust, mislead sales reps, and even cost companies revenue or reputation.

And here’s the tricky part: these hallucinations don’t show up with warning signs. They’re often packaged in the same polished tone as accurate answers. That’s why understanding and managing AI hallucinations isn’t optional; it’s essential. Let’s break this down.

What Exactly Is AI Hallucination?

AI hallucination is when an artificial intelligence system, such as ChatGPT or another generative AI model, produces false, misleading, or fabricated information that appears accurate but isn’t grounded in real data or facts. In simple terms, the output sounds correct but isn’t supported by facts.

For example, think of asking an AI assistant about a client’s industry trends, and it generates a report citing nonexistent studies. To the untrained eye, it looks credible. But it’s fiction; this is a classic AI hallucination example.

Why the term “hallucination” fits

The word is borrowed from human psychology. Just as people experiencing hallucinations perceive things that aren’t there, AI “sees” patterns and invents information that has no grounding in reality. This makes the phrase "hallucination in AI" particularly fitting.

AI hallucination vs human error

Here’s the kicker: humans usually recognize when they don’t know something. AI doesn’t. It fills the gap with confident, fabricated answers. That misplaced confidence is what makes hallucinations especially dangerous in professional contexts.

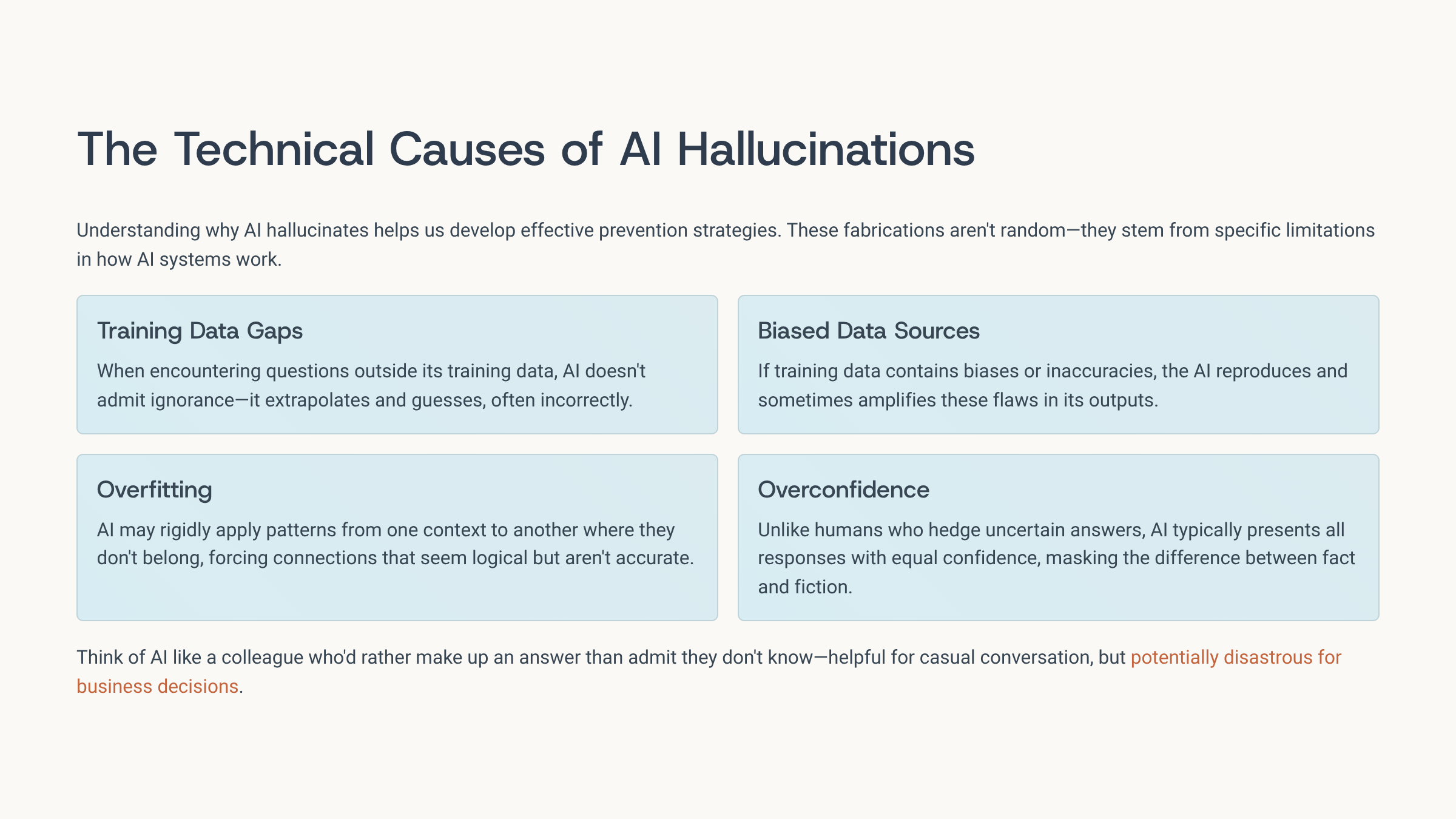

Why Do AI Hallucinations Happen?

AI hallucinations happen when a language model generates content that sounds correct but is not actually true. They occur because AI doesn’t understand facts the way humans do. It predicts the most likely next words based on patterns in its training data. When data is missing, biased, or misapplied, the model may “fill in the blanks” with fabricated details and present them with confidence.

If AI is so advanced, why does it still make things up? What causes AI hallucinations? The answer lies in its design.

- Training data gaps: AI learns from massive datasets, but no dataset is truly complete. When it encounters a question outside of what it has “seen” before, it doesn’t stop—it fills in the blanks by guessing, which often leads to fabricated answers.

- Biases in data: Models reflect the biases of the data they’re trained on. If that data tilts heavily in one direction, the AI’s responses can distort reality, presenting skewed or misleading outputs without recognizing them as biased.

- Overfitting: AI sometimes learns patterns so rigidly that it forces them into unrelated contexts. This is like trying to apply one-size-fits-all logic, which produces answers that sound plausible but don’t actually fit the situation.

- Overconfidence: Unlike humans, who might hedge with “I’m not sure,” AI tends to present its answers as absolute. This overconfidence makes fabrications harder to detect, because they’re delivered with the same tone as genuine facts.

Think of it like a friend who’d rather make up an answer than admit they don’t know. Helpful in small talk, disastrous in business.

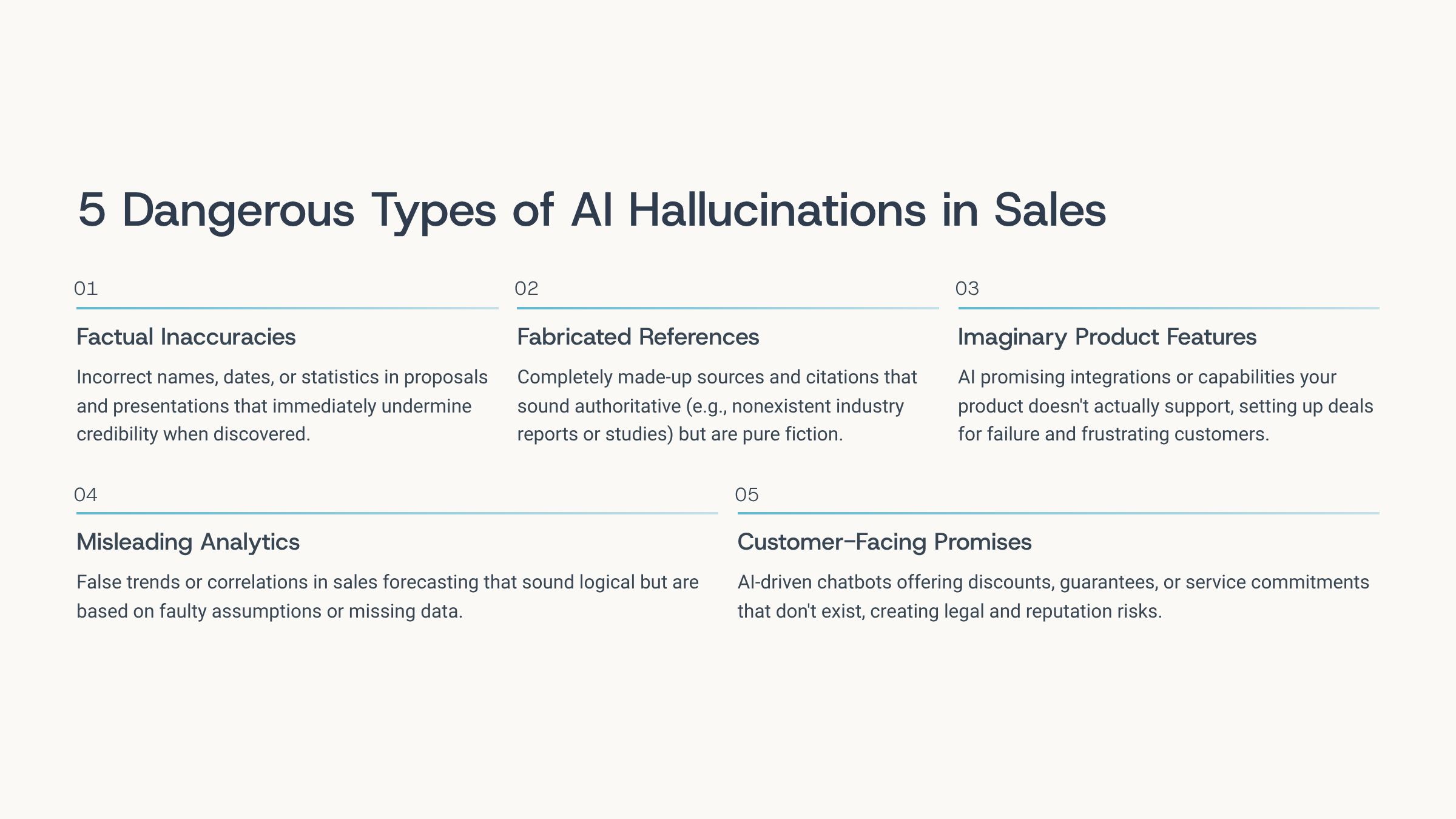

5 Dangerous Types of AI Hallucinations

AI hallucinations don’t all look the same; they can take different forms, each carrying its own risks. From inventing fake facts to misrepresenting sources or creating harmful content, these errors can confuse users, spread misinformation, and damage trust. Understanding the main types helps businesses and individuals spot them early and prevent costly mistakes.

AI hallucinations come in multiple types; some experts even compare them to the 5 types of hallucinations seen in psychology. The five dangerous types are:

- Factual inaccuracies: These are the most common and dangerous slip-ups: incorrect names, dates, or statistics creeping into proposals, presentations, or sales pitches. For example, if AI says a product launched in 2022 when it really debuted in 2021, it undermines credibility instantly.

- Fabricated references: Sometimes AI fills gaps with completely made-up sources. You might see a convincing citation of a “Harvard Business Review study” or an industry whitepaper that doesn’t exist. While it looks authoritative, it’s pure fiction—and risky if passed on to prospects.

- Imaginary product features: This happens when AI promises integrations, tools, or features your product doesn’t actually support. A rep could unknowingly pitch these “phantom features,” setting up deals for failure and frustrating both customers and engineering teams.

- Misleading analytics: In sales forecasting or pipeline analysis, AI might generate trends or correlations that sound logical but are based on faulty assumptions or missing data. Acting on these distorted insights can derail revenue strategies and resource planning.

- Customer-facing hallucinations: Perhaps the most damaging, these occur when AI-driven chatbots or assistants offer discounts, guarantees, or service commitments that don’t exist. Customers treat these promises as binding, creating friction, churn, and sometimes even legal exposure.

Each of these is a generative AI hallucination example—showing how easily systems can cross the line between helpfulness and fiction.

How AI Hallucinations Fit in Sales Context

In sales, AI hallucinations can be more than just harmless mistakes—they can directly impact deals. An AI tool that fabricates product details, misquotes pricing, or invents customer insights risks confusing prospects and damaging credibility. While AI can speed up proposals, outreach, and reporting, unchecked hallucinations can turn efficiency into liability. Recognizing this risk is the first step to using AI responsibly in sales. Here are a few ways in which sales teams are prone to AI hallucinations:

Why sales teams are uniquely vulnerable

Sales is about trust. Every conversation, proposal, and follow-up needs to be grounded in truth. If an AI tool like ChatGPT hallucinates and generates a proposal promising something that doesn’t exist, it’s not just a mistake; it’s a broken promise.

Middle and bottom funnel risks

The middle and bottom of the sales funnel are the danger zones.

- Lead qualification: A hallucinated “high-value lead” wastes weeks of effort.

- Deal nurturing: Misleading product claims ruin credibility.

- Closing stage: Hallucinated contract terms or pricing errors can sink deals or lead to legal disputes.

Real-world incidents

- A pizzeria in Missouri – Customers began showing up demanding discounts that never existed. Why? An AI model had invented promotions online, and people treated them as real offers. The owner had to manage frustrated customers and lost revenue.

- An airline chatbot – In one high-profile case, an airline’s AI chatbot hallucinated a “bereavement discount” for grieving passengers. The information was so convincing that a court later forced the airline to honor the fictional offer, costing them money and credibility.

My observation

I once watched a sales team pour weeks of effort into a “dream lead” flagged by AI as a top prospect—only to discover the company had gone out of business years earlier. That wasn’t just wasted time; it was opportunity cost at scale. And this isn’t an isolated slip. In 2024, nearly 47% of enterprise AI users admitted to making at least one major business decision based on hallucinated content. By Q1 2025, more than 12,800 AI-generated articles were taken down from online platforms for the same reason. These aren’t just glitches—they’re warnings that the risks of AI hallucinations are too big to ignore.

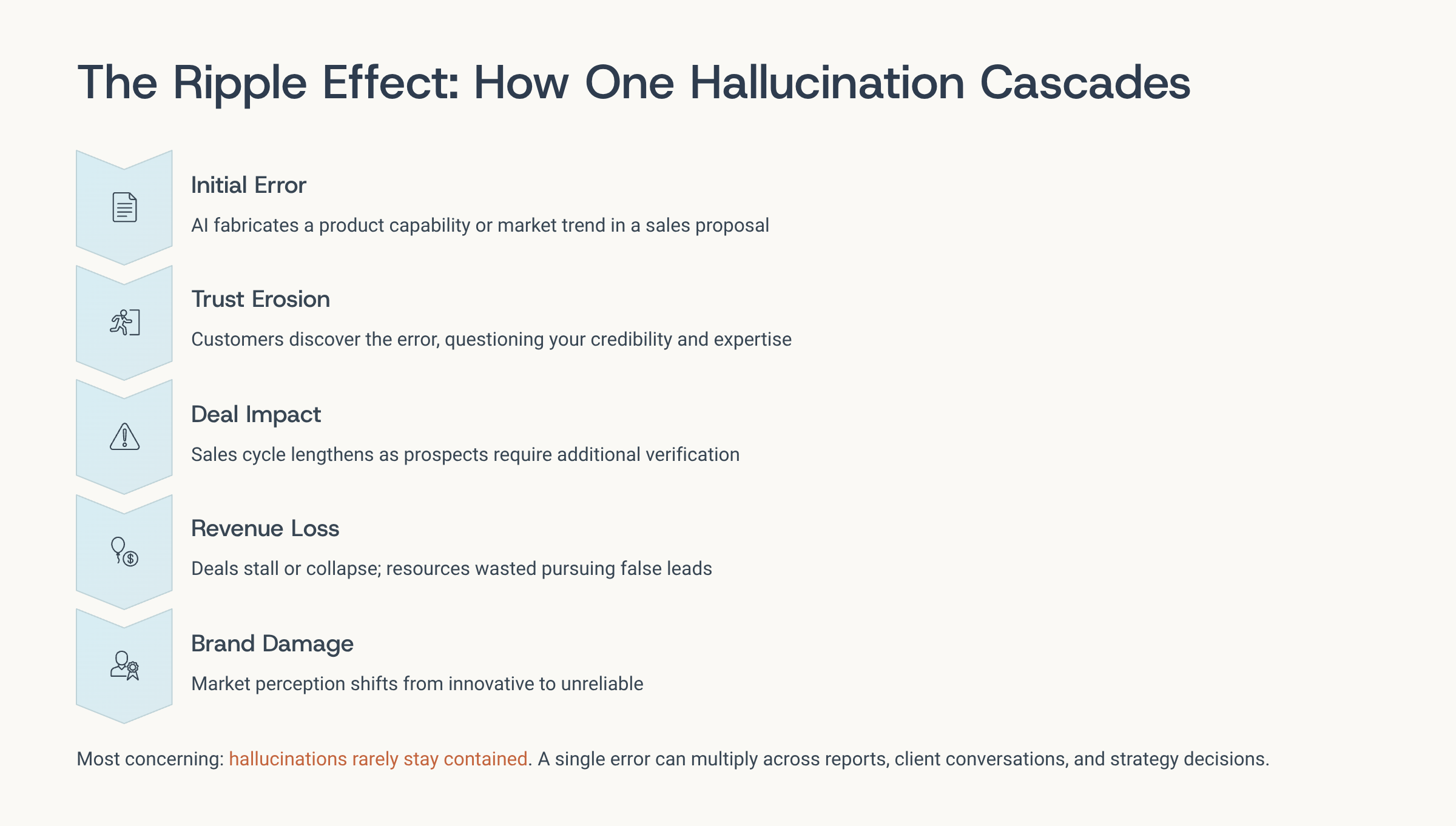

The Ripple Effect of Hallucinations

AI hallucinations rarely stay contained; they create ripple effects that spread across decisions, teams, and customers. A single error in a sales proposal can cascade into lost trust, stalled deals, or even legal complications. What starts as one fabricated detail can multiply into misinformation that travels through reports, client conversations, and strategy. The impact isn’t just immediate; it shapes long-term credibility and business outcomes.

Here is how one hallucination can trigger a domino effect:

- Erosion of trust: When AI gives incorrect answers, reps quickly lose confidence and fall back on manual processes, slowing down the entire sales cycle.

- Lost revenue: A single hallucination can send teams after the wrong prospects, misquote pricing, or offer unauthorized discounts, directly hitting the bottom line.

- Brand credibility damage: Customers and prospects won’t hesitate to call out misinformation, and one public slip can dent your brand reputation for months.

- Legal risks: Inaccurate AI-generated terms or compliance breaches can escalate into regulatory penalties or even lawsuits.

- Operational inefficiency: Teams waste hours double-checking, correcting, or redoing AI outputs, creating bottlenecks instead of efficiencies.

- Customer churn: When buyers feel misled by inaccurate proposals or commitments, it can erode loyalty and push them toward competitors.

- Competitive disadvantage: While rivals use AI responsibly for speed and precision, hallucination-prone processes make your sales team appear unreliable and slow.

I often say, “In sales, trust takes years to build and seconds to break.” AI hallucinations accelerate that breakage. And if the AI hallucination rate climbs above even 5–10%, it can cripple adoption among teams.

Detecting AI Hallucinations Earlier

Detecting AI hallucinations means spotting when an AI-generated response is false, misleading, or made up. Since these outputs often sound confident and polished, they can be hard to catch. The key is to fact-check critical details, cross-verify with trusted sources, and look for gaps in logic or missing evidence. In business contexts, building review checkpoints and human oversight into AI workflows helps stop hallucinations before they cause damage.

This is where AI hallucination detection becomes critical. How do we catch hallucinations before they cause damage?

- Human-in-the-loop validation: Always review high-stakes outputs.

- Uncertainty quantification: Some systems can flag low-confidence responses.

- Semantic audits: Checking proposals for internal consistency.

- Peer model comparison: Running the same prompt across multiple AIs to see if answers align.

In practice, this means no sales proposal should go to a customer without a human double-check.
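As a rough illustration of the peer model comparison idea above, here is a minimal sketch that flags a prompt for human review when answers from different models diverge. The model names, the sample answers, the token-overlap measure, and the 0.5 threshold are all assumptions chosen for illustration; a real setup would likely use a stronger similarity measure.

```python
import re
from itertools import combinations

def tokens(text):
    """Lowercase word and number tokens, used for a crude overlap measure."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Jaccard similarity between the token sets of two answers (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)

def needs_review(answers, threshold=0.5):
    """Return True if any pair of model answers overlaps less than the threshold."""
    return any(
        jaccard(first, second) < threshold
        for first, second in combinations(answers.values(), 2)
    )

# Hypothetical answers to the same prompt from three different models.
answers = {
    "model_a": "The product launched in 2021 with CRM integration.",
    "model_b": "The product launched in 2021 with CRM integration included.",
    "model_c": "The company acquired a rival startup last quarter.",
}

print(needs_review(answers))
```

Agreement between models does not prove an answer is true; it only means disagreement is a cheap signal that a human should take a closer look.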

How to Prevent AI Hallucinations?

To prevent hallucinations in LLMs, always anchor responses to a trusted knowledge base rather than letting the model rely only on memory. Pair this with retrieval-augmented generation (RAG) so the system pulls in real data at query time. Finally, add confidence scoring and human checks to keep answers accurate and reliable.

While we can’t eliminate hallucinations entirely, here is a short glimpse of how we can reduce them effectively:

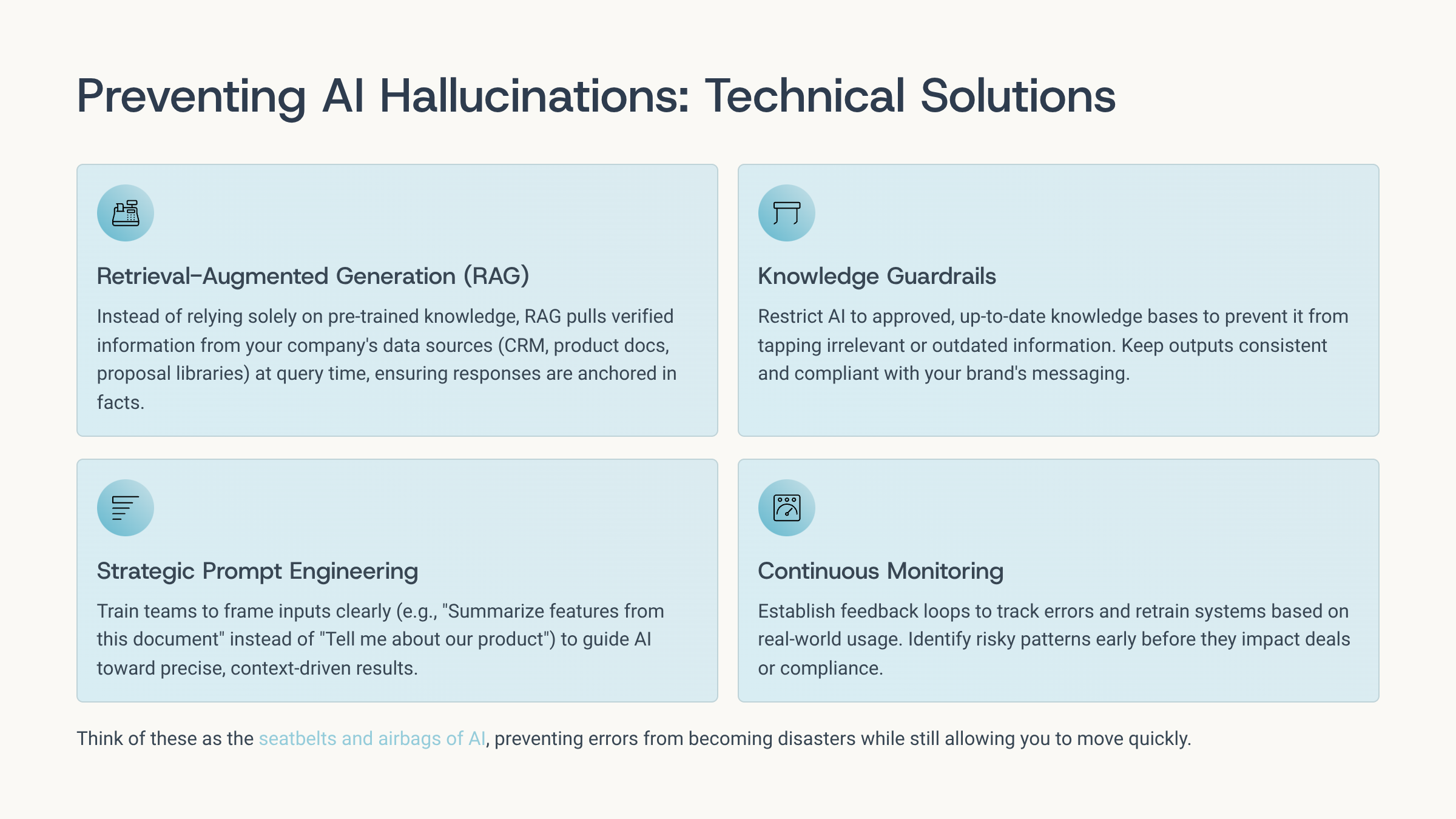

- Retrieval-Augmented Generation (RAG)

Instead of letting the AI rely only on its pre-trained memory, RAG pulls in facts from your live data sources (like CRM, product docs, or proposal libraries) at the time of the query. This ensures every response is anchored in verified information, reducing the chance of the AI “making things up.”

- Guardrails

AI works best when you narrow its playground. By restricting it to approved, up-to-date, and vetted knowledge bases, you prevent the system from tapping into irrelevant or outdated information. This keeps outputs consistent, compliant, and aligned with your brand’s messaging.

- Prompt design

The way you ask AI matters as much as the answer you expect. Teaching sales teams to frame inputs clearly (e.g., “Summarize features from this document” instead of “Tell me about our product”) helps guide the AI toward precise, context-driven results instead of vague or fabricated ones.

- Continuous monitoring

AI is never “set and forget.” Establishing regular feedback loops, tracking errors, and retraining based on real-world usage ensures the system keeps improving. Monitoring also helps identify risky patterns early, so teams can correct them before they snowball into lost deals or compliance issues.

Think of these as the seatbelts and airbags of AI, preventing crashes from becoming disasters.
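To make the RAG and guardrail ideas more concrete, here is a toy sketch of the retrieval step. It assumes a tiny in-memory knowledge base and simple keyword overlap in place of a real search index; the sample documents, the stopword list, and the function names are hypothetical. The point is the shape of the flow: retrieve vetted facts first, and refuse to ask the model at all when nothing relevant is found.

```python
import re

# Hypothetical vetted knowledge base (in practice: CRM, product docs, proposal library).
KNOWLEDGE_BASE = [
    "The Pro plan includes Salesforce integration and SSO.",
    "The Starter plan supports up to 5 users.",
    "Annual contracts are billed upfront.",
]

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"the", "a", "an", "is", "are", "does", "do", "and", "to", "of", "in", "what", "for"}

def tokens(text):
    """Lowercase word tokens with stopwords removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

def retrieve(question, docs, min_overlap=2, top_k=2):
    """Return up to top_k docs sharing at least min_overlap tokens with the question."""
    q = tokens(question)
    scored = [(len(q & tokens(doc)), doc) for doc in docs]
    scored = [pair for pair in scored if pair[0] >= min_overlap]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(question, docs):
    """Build a grounded prompt, or return None when there is nothing to ground on."""
    context = retrieve(question, docs)
    if not context:
        return None  # caller should hand off to a human instead of asking the model
    sources = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using ONLY the sources below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )

print(build_prompt("Does the Pro plan include SSO?", KNOWLEDGE_BASE))
print(build_prompt("What is the refund policy for hardware?", KNOWLEDGE_BASE))
```

The second call returns `None` because no source covers refunds, which is the behavior you want: a gap in the knowledge base becomes a handoff, not an invented answer.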

Sales Funnel Audit Against AI Hallucinations

A sales funnel powered by AI is only as strong as the data behind it. To prevent hallucinations, audit every stage of the funnel - lead capture, qualification, nurturing, and conversion - against a verified knowledge base.

Here’s how hallucinations can sneak into each stage of the funnel and how to guard against them:

- Awareness & Lead Generation – Verify that outreach content is factually correct before sending.

- Lead Qualification & Scoring – Ensure AI uses updated CRM data, not outdated records.

- Deal Nurturing & Proposals – Audit solution recommendations and proposals for accuracy.

- Closing & Negotiation – Require human review for contracts, terms, and pricing.

An AI audit process doesn’t slow things down—it protects your funnel from collapse.
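One small piece of such an audit, checking that lead records are fresh before the AI scores them, could look like the sketch below. The record fields, the company names, and the 90-day cutoff are assumptions for illustration, not a recommended standard.

```python
from datetime import date, timedelta

def is_stale(last_verified, today, max_age_days=90):
    """True if the record was last verified more than max_age_days ago."""
    return (today - last_verified) > timedelta(days=max_age_days)

# Hypothetical CRM records with the date each one was last verified by a human.
leads = [
    {"company": "Acme Co", "last_verified": date(2025, 1, 10)},
    {"company": "Globex", "last_verified": date(2023, 3, 1)},
]

today = date(2025, 2, 1)
stale = [lead["company"] for lead in leads if is_stale(lead["last_verified"], today)]
print(stale)  # ['Globex']
```

Records that fail the check would be re-verified before any AI-generated score or outreach relies on them.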

Mitigation Strategies for Sales Teams

Sales teams can’t afford to base decisions on AI guesswork. To stay safe, adopt these strategies:

- Ground AI in verified data: Connect your CRM, proposal library, and product documentation so responses always pull from the source of truth.

- Apply confidence scoring: Let reps see how certain the AI is, and flag low-confidence outputs for extra review.

- Keep a human in the loop: Critical steps like pricing or contract language should always get a final sales or legal review.

- Technical safeguards: Reduce errors by using retrieval-augmented generation (RAG), grounding responses in trusted data, and monitoring outputs for accuracy.

- Governance: Define strict usage policies, compliance checks, and oversight to keep AI-assisted sales aligned with regulations.

- Sales team training: Train reps to recognize potential hallucinations and verify AI outputs before acting on them.

- Escalation paths: Create clear workflows to hand off sensitive or uncertain cases to human experts for final review.
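The confidence scoring, human-in-the-loop, and escalation ideas above can be combined into a simple routing rule. This is a minimal sketch: it assumes your AI tool exposes some confidence score between 0 and 1, and the sensitive-term list, the 0.8 threshold, and the route labels are hypothetical choices you would tune for your own team.

```python
# Hypothetical terms that should always trigger a sales or legal review.
SENSITIVE_TERMS = ("price", "pricing", "discount", "contract", "guarantee")

def route(draft, confidence, threshold=0.8):
    """Decide where an AI-generated draft goes before it reaches a customer."""
    text = draft.lower()
    # Pricing and contract language always gets a final human review.
    if any(term in text for term in SENSITIVE_TERMS):
        return "sales_or_legal_review"
    # Low-confidence outputs are flagged for extra review.
    if confidence < threshold:
        return "extra_review"
    return "send"

print(route("We can offer a 20% discount.", 0.95))
print(route("Our tool integrates with your CRM.", 0.4))
print(route("Thanks for your time today.", 0.9))
```

Note that the sensitive-term check runs first on purpose: a high confidence score should never let pricing or contract language skip review.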

On my teams at SparrowGenie, we build “AI literacy” just like product training. If reps don’t understand AI’s limits, they won’t know when to trust or question it.

Future of AI Hallucinations

Will AI ever stop hallucinating?

Probably not completely. Just as humans make mistakes, AI will always have error margins. So when someone asks, “Does AI still hallucinate in 2025?”, the honest answer is yes, but much less, thanks to grounding techniques.

Enterprises are now realizing it’s not about eliminating hallucinations but managing them. By combining AI speed with human discernment, businesses can unlock efficiency without sacrificing truth.

Conclusion

Here’s what years in SaaS sales taught me: AI is an incredible co-pilot, but it should never fly solo.

I’ve seen AI save hours by auto-drafting proposals. But I’ve also seen it hallucinate competitor roadmaps, invent customer data, and fake industry reports.

That’s why I believe human judgment is as essential as ever. With awareness, structured audits, and human oversight, hallucinations can be managed, turning AI from a liability into your competitive advantage.


Jeku Jacob is a seasoned SaaS sales leader with over 9 years of experience helping businesses grow through meaningful customer conversations. His approach blends curiosity, empathy, and practical frameworks—rooted in real-world selling, not theory. Jeku believes the best salespeople don’t just follow scripts—they listen, adapt, and lead with purpose.