AI-Powered Sales Intelligence Tools: Cutting Through the Hype to Find What Works

Article written by Kate Williams

Summary

AI in sales isn’t magic—it’s a multiplier. This post cuts through the hype to show what AI sales intelligence tools really deliver, what’s overhyped, and how to roll them out without burning cash. Includes real results, implementation tips, red flags to watch for, and a clear 30-day action plan. Because smart reps with AI? They win.

Your reps don’t need more tabs. They need timing. Between CRM fields, call notes, intent feeds, and Slack pings, sales feels like noise on full volume. AI-powered sales intelligence turns that noise into a short list: who’s in-market, what they care about, and the next best action. It’s not hype; it’s fewer guesses, faster meetings, and cleaner forecasts. The sections below cover what AI-powered sales intelligence is, why it matters now, how to evaluate tools, how it works under the hood, and how to roll it out.

What is AI-Powered Sales Intelligence?

AI-powered sales intelligence merges first-party data (CRM, pipeline, product usage), third-party signals (firmographics, technographics, intent), and conversational/behavioral data (emails, calls, site events). Machine learning and LLMs then classify, score, summarize, and route those signals into your daily workflow—prioritizing accounts, predicting intent, and personalizing outreach at scale.

Think of it as a signal supply chain:

- Ingest: CRM, MAP, web analytics, calls, email threads, community chatter, and third-party data.

- Enrich & Normalize: De-dupe, unify identities, and map buying groups.

- Model & Score: Fit, intent, propensity, churn risk, next-best-action.

- Orchestrate: Surface insights in the tools your team already uses (Gmail/Outlook, CRM, SEP).

- Learn: Close-loop feedback—wins/losses tune the models.

Next, here is why sales teams need it now.

Why now? Because noise is beating most sales teams

Your buyers are drowning in content—and so are your reps. Inboxes, ads, webinars, Slack threads, call recordings, CRM fields, and a dozen “must-have” tools create a wall of sound. That leads to too many touches and not enough timing. AI-powered sales intelligence earns its keep by separating real buyer intent data from background chatter and turning it into predictive lead scoring and next-best actions your team can actually use.

- Attention is scarce: Buyers skim, self-educate, and go dark. If your message isn’t tied to a fresh signal, it’s ignored.

- Buying groups got bigger: One champion isn’t enough; legal, security, finance, and IT all weigh in. You need to see the whole committee early.

- Tools multiplied, context vanished: Data lives everywhere; insight lives nowhere. Sales intelligence tools must stitch sources and surface context in the rep’s flow (CRM, email, call prep).

- Personalization at “one-to-few,” not one-to-one: Generic copy dies. Signal-grounded messaging (page viewed, topic searched, stage behavior) wins.

- Forecasts need evidence, not optimism: Activity + intent + stage movement beats “gut feel” every quarter.

The teams winning today aren’t louder—they’re better timed. They use AI-powered sales intelligence to decide who, why, and what now—then show up with proof, not guesses.

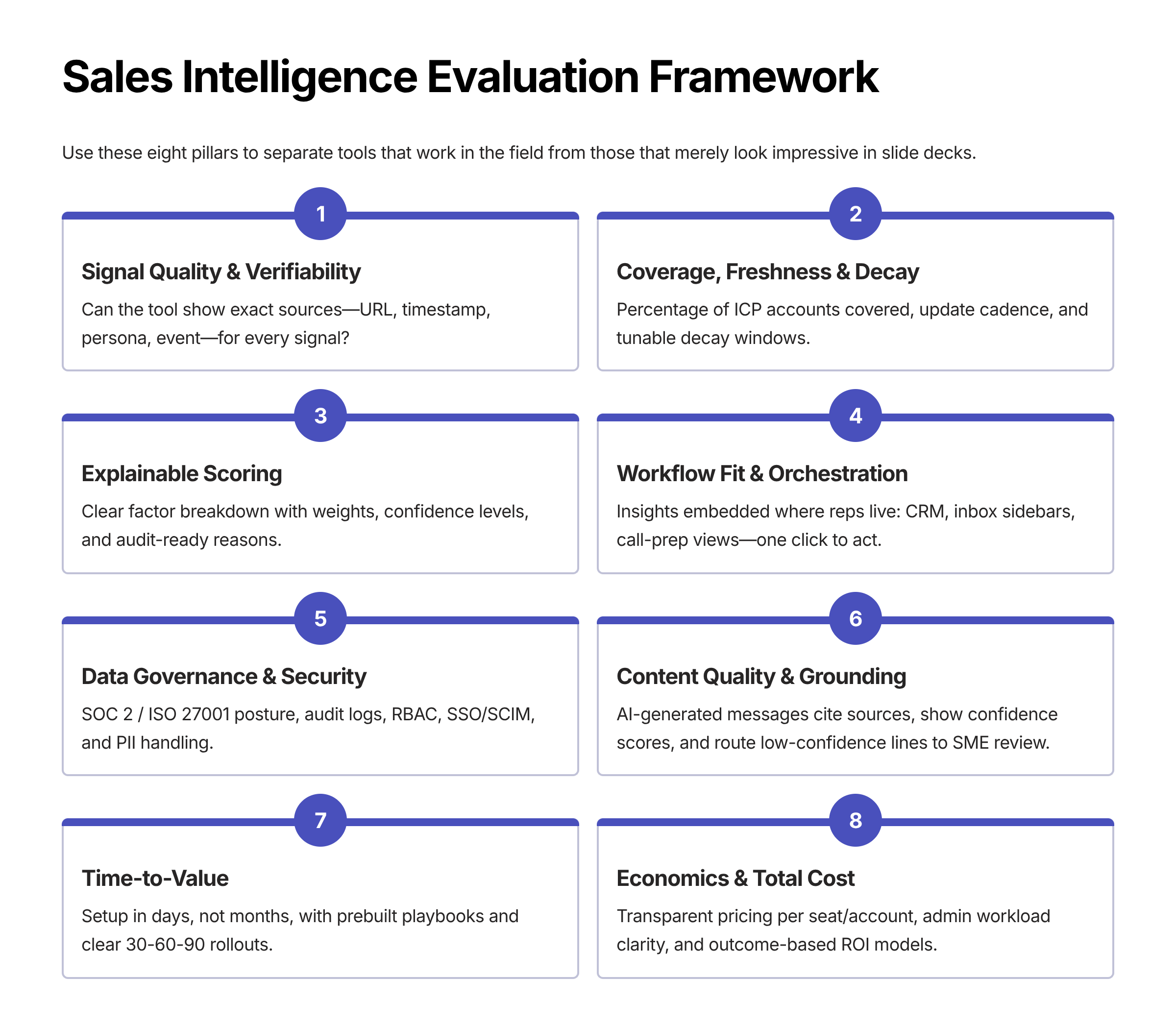

The “No-Hype” Evaluation Framework (use this in demos)

Here is a quick evaluation framework for sales intelligence that you could use to cut the hype and focus on your requirements:

1) Signal Quality & Verifiability

What to test

- Can the tool show the exact source of every signal (URL, timestamp, persona, event)?

- Does it separate buyer intent data from weak proxies (e.g., generic traffic spikes)?

- Are themes LLM-extracted and labeled (e.g., “SOC 2”, “pricing”, “Salesforce integration”)?

Demo prompts

- “Open 5 recent ‘in-market’ accounts. For each, show the pages viewed, content themes, and the evidence behind the prediction.”

- “Blacklist a noisy source in real time and recalc signals.”

Green flag: Click-through evidence, deduped identities, human-readable summaries.

Red flag: Vague ‘surge’ scores, no links back to sources, ‘trust us’ explanations.

2) Coverage, Freshness & Decay

What to test

- % of your ICP accounts covered; update cadence (daily vs weekly).

- Signal decay windows (e.g., intent fades after 7 days unless reconfirmed).

- Regional coverage and data gaps for your account-based marketing segments.

Demo prompts

- “Show coverage for our Tier 1 list. Where are the blind spots?”

- “Shorten the decay window for security-related interest and show how priorities change.”

Green flag: Transparent coverage reports, tunable decay, surge + recency interplay.

Red flag: Static weekly batches, no decay logic, no gap analysis.

3) Explainable Scoring (Fit + Intent + Behavior)

What to test

- Clear factor breakdown for predictive lead scoring (weights, recency, confidence).

- Ability for RevOps to adjust weights, add rules, and simulate outcomes.

- Per-score confidence level with reasons (e.g., low data volume).

Demo prompts

- “Open a hot account. Explain the score in plain English and show the top 5 drivers.”

- “Reduce ‘firmographic fit’ weight by 20% and re-score—what changes?”

Green flags: Factor sliders, sandbox simulations, and versioned rule sets.

Red flags: Black-box scores, no audit history, ‘AI decided’ answers.

4) Workflow Fit & Orchestration

What to test

- Insights embedded where reps live: CRM, inbox sidebars, call-prep views, SEP.

- One-click actions (add to sequence, book SME, share proof asset).

- Role-specific views for AEs, BDRs, CSMs, and managers.

Demo prompts

- “From this signal, add the buyer to a ‘pricing interest’ sequence—no tab-hopping.”

- “Open an AE opportunity and show the buying group + recommended next best action.”

Green flags: Inline panels, action buttons, and zero swivel-chairing.

Red flags: Standalone dashboards only, CSV exports, and manual copy/paste to execute.

5) Data Governance, Security & Compliance

What to test

- SOC 2 / ISO 27001 posture, data residency, PII handling, RBAC, SSO/SCIM.

- Audit logs for score changes, model updates, and user actions.

- Source allow/deny lists and content governance for regulated messaging.

Demo prompts

- “Show audit history for this score and who changed which weights, when.”

- “Demonstrate least-privilege access for a contractor user.”

Green flag: Fine-grained permissions, tamper-evident logs, export controls.

Red flag: All-or-nothing roles, no logs, unclear data lineage.

6) Content Quality & Grounding (for AI assistance)

What to test

- Are emails/call notes grounded in observable signals (not generic fluff)?

- Does the system cite sources and show a Confidence Score for generated content?

- Multi-persona variants (champion, legal, security, finance) with approved language.

Demo prompts

- “Draft a 120-word message grounded in buyer intent data from the last 48 hours; show citations.”

- “Lower the content confidence threshold and route low-confidence lines to SME review.”

Green flag: Short, signal-anchored messages and citations; automatic SME routes.

Red flags: Long generic prose, no source links, no citation discipline.

7) Time-to-Value & Change Management

What to test

- Setup in days (not months): connectors, mappings, baseline scoring.

- Prebuilt playbooks: competitive pressure, compliance interest, renewal risk.

- Measurable 30-60-90 rollouts with owners and KPIs.

Demo prompts

- “Outline a 90-day plan with named owners and weekly deliverables.”

- “Show a live dashboard for connect rate, meeting rate, stage velocity, win rate.”

Green flag: Clear owners, short TTV, and KPI dashboards out of the box.

Red flag: Long SOWs, vague milestones, ‘trust the process.’

8) Economics & Total Cost

What to test

- Pricing per seat/account/volume, overage behavior, and data vendor passthroughs.

- Admin workload (who maintains mappings, scoring, and governance?).

- ROI model tied to B2B sales outcomes (meetings, cycle time, win rate, ACV).

Demo prompts

- “Model ROI for 20 AEs and 10 BDRs: baseline vs. expected lift in 90 days.”

- “List all third-party data fees we’ll see on top of the license cost.”

Green flags: Transparent pricing, admin hours <3/wk, outcome-based ROI math.

Red flags: Hidden data fees, heavy admin burden, and vanity metrics.

Weighted Scorecard (copy and reuse)

| Pillar | Weight | Questions to Answer | Score (0–10) |
| --- | --- | --- | --- |
| Signal Quality & Verifiability | 20% | Are signals specific, trusted, and inspectable? | |
| Coverage, Freshness & Decay | 15% | Will we see enough of our ICP fast enough? | |
| Explainable Scoring | 15% | Can RevOps tune and audit scores with reasons? | |
| Workflow Fit & Orchestration | 20% | Do reps act in-flow with one click? | |
| Governance, Security & Compliance | 15% | Are controls, logs, and access enterprise-grade? | |
| Content Grounding & Confidence | 10% | Are messages cited with a Confidence Score? | |
| Time-to-Value & Economics | 5% | Can we launch fast with clear ROI? | |

Overall (out of 100): sum of (Score × Weight) across all pillars, multiplied by 10 because each pillar is scored 0–10 → Pass ≥ 80, Consider 70–79, Decline < 70.
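To make the arithmetic concrete, here is a minimal Python sketch of the scorecard. The weights and pass bands come from the table; the vendor scores are hypothetical. Because pillars are scored 0–10 and weights are percentages, the weighted sum is divided by 10 to land on a 0–100 scale.

```python
# Pillar weights in percent, copied from the scorecard; they sum to 100.
WEIGHTS = {
    "Signal Quality & Verifiability": 20,
    "Coverage, Freshness & Decay": 15,
    "Explainable Scoring": 15,
    "Workflow Fit & Orchestration": 20,
    "Governance, Security & Compliance": 15,
    "Content Grounding & Confidence": 10,
    "Time-to-Value & Economics": 5,
}


def overall_score(scores):
    """Weighted sum of 0-10 pillar scores, rescaled to a 0-100 total."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing pillar scores: {sorted(missing)}")
    # weight (percent) x score (0-10) peaks at 1000, so divide by 10.
    return sum(WEIGHTS[p] * scores[p] for p in WEIGHTS) / 10


def verdict(total):
    """Map a 0-100 total to the Pass / Consider / Decline bands."""
    if total >= 80:
        return "Pass"
    if total >= 70:
        return "Consider"
    return "Decline"


# Hypothetical vendor scores, for illustration only.
vendor_scores = dict(zip(WEIGHTS, [9, 8, 7, 9, 8, 6, 7]))
total = overall_score(vendor_scores)
print(total, verdict(total))  # 80.0 Pass
```

Scoring every vendor with the same function keeps demo impressions from quietly shifting the weights between evaluations.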

RFP/RFI Questions You Can Reuse

- Signals: “List all signal sources with refresh cadence and sample evidence links.”

- Scoring: “Provide factor weights, decay logic, and an audit sample for a changed rule.”

- Orchestration: “Show native actions available from CRM record and email sidebar.”

- Security: “Describe RBAC, SSO/SCIM, data residency, PII handling, and export controls.”

- Compliance: “How do you prevent unapproved claims in AI-generated content?”

- Governance: “Do you support source allow/deny lists and content review queues?”

- TTV: “Detail a 90-day plan with milestones, roles, and success metrics.”

- ROI: “Share two customer-visible dashboards used to prove lift (definitions included).”

What “Great” Looks Like in a Live Demo

- You can trace every hot account back to concrete behaviors.

- Scores are explainable, adjustable, and versioned.

- Reps take action without tab-hopping.

- AI content shows citations and confidence, and low-confidence lines are routed.

- Security owners nod at the logs, RBAC, and residency story.

- A 90-day plan names owners, dates, and KPIs tied to pipeline velocity and win-rate lift.

Use this to separate AI-powered sales intelligence that works in the field from tools that merely look impressive in a slide deck.

Real Outcomes from AI Sales Intelligence

These are the outcomes that stand out when sales intelligence is in place:

- 15–30% higher meeting show rates via intent-timed outreach

- 20–30% shorter cycle times with buying-group detection and next-best-action

- 10–25% higher win rates by fusing fit + intent + persona-level personalization

- Cleaner forecast from objective, behavior-based evidence (not hope)

Track these six: connect rate, meeting rate, stage advance velocity, win rate, ACV lift, and pipeline coverage. Baseline for 30 days, then compare month-over-month to see what sales intelligence actually changes for your own team.

How AI-Powered Sales Intelligence actually works

Here is a detailed breakdown of how AI-powered sales intelligence actually works:

1) Data ingestion & identity resolution

- Sources: CRM (opps/activities), MAP, website/product analytics, call recordings, email/calendar, community/social, third-party buyer intent data (firmo/techno/consumption).

- Pipelines: ELT/CDC connectors stream events into a governed lake/warehouse.

- Identity graph: Deterministic + probabilistic matching (domains, hashes, titles) to unify account, contact, and buying-group entities.

- Normalization: Standard schemas (events, accounts, people), dedupe rules, field confidence.
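As a rough illustration of deterministic plus probabilistic matching, the sketch below tries an exact domain match first and falls back to fuzzy company-name similarity. The record fields, the sample accounts, and the 0.85 threshold are assumptions made for this example; a production identity graph would use more signals (hashes, titles) than this.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse


def normalize_domain(raw):
    """Reduce a URL, email address, or bare host to a lowercase domain."""
    raw = raw.strip().lower()
    if "@" in raw:
        raw = raw.rsplit("@", 1)[1]
    if "//" not in raw:
        raw = "//" + raw  # lets urlparse read the value as a host
    host = urlparse(raw).hostname or ""
    return host[4:] if host.startswith("www.") else host


def match_account(record, accounts, threshold=0.85):
    """Return (account_id, method, confidence), or None if nothing matches."""
    domain = normalize_domain(record.get("domain", ""))
    # Deterministic pass: an exact domain match wins outright.
    for account in accounts:
        if domain and domain == normalize_domain(account["domain"]):
            return account["id"], "deterministic", 1.0
    # Probabilistic pass: fuzzy similarity on company name.
    name = record.get("company", "").strip().lower()
    if not name:
        return None
    scored = [
        (SequenceMatcher(None, name, a["name"].lower()).ratio(), a["id"])
        for a in accounts
    ]
    best_ratio, best_id = max(scored, default=(0.0, None))
    if best_ratio >= threshold:
        return best_id, "probabilistic", round(best_ratio, 2)
    return None


# Hypothetical account list, for illustration only.
accounts = [
    {"id": "A1", "name": "Acme Corporation", "domain": "acme.com"},
    {"id": "A2", "name": "Globex", "domain": "globex.io"},
]
print(match_account({"domain": "https://www.acme.com/pricing"}, accounts))
```

Returning the match method and a confidence alongside the ID is what lets downstream scoring treat fuzzy matches more cautiously than exact ones.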

2) Signal extraction & labeling

- Event classification (ML): Distinguish research vs. comparison vs. pricing actions.

- LLM topic tagging: Pull compliant themes from calls/emails/pages (e.g., “SOC 2,” “CPQ,” “Salesforce integration”).

- Sentiment & risk cues: Detect blockers (legal, security, procurement), champion energy, and objection patterns.

- Recency/decay: Time-weighted scoring so last 3–7 day behaviors matter more.

3) Feature store for modeling

- Account features: Firmographics, technographics, funnel position, and historic win signals.

- Behavioral features: Frequency, recency, diversity of touchpoints, and stakeholder roles.

- Context features: Competitor pages hit, security content consumed, product usage deltas.

- Governance: Versioned features with data lineage for RevOps audits.

4) Scoring & predictions (Fit + Intent + Behavior)

- Fit score: ICP similarity via gradient-boosted trees/embeddings (company size, geo, industry, stack).

- Intent score: External + first-party research intensity with decay windows and noise filters.

- Propensity / predictive lead scoring: Likelihood to create/advance/close this quarter; explainable factors and confidence.

- Churn/expansion: Usage signals + sentiment for CSM plays.

- Explainability: Top drivers, factor weights, and evidence links exposed to users—not a black box.

5) Buying-group detection & influence map

- Role inference: Titles + activity patterns → champion, mobilizer, blocker, approver.

- Coverage gaps: Highlight missing legal/IT/finance stakeholders early.

- Next step prompts: “Add security reviewer,” “Share ROI one-pager to finance.”
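A simplified sketch of the coverage-gap check is shown here. It infers a stakeholder function from job titles alone using a hypothetical keyword map; the activity-pattern half of role inference is left out, and the keyword lists are assumptions you would tune to your own data.

```python
import re

# Hypothetical keyword map, for illustration; tune it to your own titles.
FUNCTION_KEYWORDS = {
    "legal": {"legal", "counsel", "compliance"},
    "security": {"security", "ciso", "infosec"},
    "finance": {"finance", "cfo", "controller", "procurement"},
    "it": {"it", "cio", "infrastructure", "sysadmin"},
}


def infer_function(title):
    """Guess a stakeholder function from a job title, or return None."""
    words = set(re.findall(r"[a-z]+", title.lower()))
    for function, keywords in FUNCTION_KEYWORDS.items():
        if words & keywords:
            return function
    return None


def coverage_gaps(titles, required=("legal", "security", "finance", "it")):
    """List required functions with no matching contact on the deal."""
    covered = {infer_function(t) for t in titles}
    return [f for f in required if f not in covered]


deal_titles = ["VP Engineering", "Chief Information Security Officer", "IT Director"]
print(coverage_gaps(deal_titles))  # ['legal', 'finance']
```

Each gap maps naturally to a next-step prompt, such as adding a finance contact before the proposal stage.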

6) Next-Best-Action (NBA) engine

- Policy + ML hybrid: Business rules (compliance, territories) + model-driven suggestions.

- Action library: Add to sequence, book SME, attach proof asset, request warm intro.

- Guardrails: SLA checks, do-not-contact lists, and account-based marketing priority tiers.
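One way to picture the policy + ML hybrid: business rules filter the candidate actions first, and a model score only ranks what survives. The `Suggestion` class, its fields, and the sample data below are hypothetical, and `model_score` stands in for whatever an upstream model produces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    action: str          # e.g. "Book SME", "Share proof asset"
    contact_email: str
    territory: str
    model_score: float   # assumed output of an upstream model, 0 to 1


def next_best_action(suggestions, do_not_contact, rep_territories):
    """Apply policy rules first, then pick the top model-ranked action."""
    allowed = [
        s for s in suggestions
        if s.contact_email not in do_not_contact
        and s.territory in rep_territories
    ]
    return max(allowed, key=lambda s: s.model_score, default=None)


# Hypothetical candidates, for illustration only.
candidates = [
    Suggestion("Add to sequence", "cfo@example.com", "EMEA", 0.91),
    Suggestion("Book SME", "ciso@example.com", "EMEA", 0.78),
    Suggestion("Share proof asset", "it@example.com", "APAC", 0.85),
]
choice = next_best_action(
    candidates,
    do_not_contact={"cfo@example.com"},
    rep_territories={"EMEA"},
)
print(choice.action)  # Book SME
```

Filtering before ranking means a high model score can never override a do-not-contact or territory rule.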

7) Grounded content generation (LLM with citations)

- Inputs: Score drivers, topics, pages viewed, persona, stage.

- Outputs: 90–120 word one-to-few emails, call prep briefs, and micro-talk-tracks.

- Citations & Confidence Score: Inline references to signals (URLs/timestamps) + a visible confidence level; sub-threshold lines are routed to SME review.

- Style controls: Approved snippets, brand voice, and regulated claims filters.

8) Orchestration in the rep’s flow

- Surfaces: CRM record sidebar, email add-in, SEP dialer, call-prep view.

- One-click execution: No swivel-chairing—actions fire from where the rep is.

- Closed-loop logging: Every action/evidence written back to the CRM for reporting.

9) Security, privacy & compliance by design

- Controls: SOC 2/ISO posture, RBAC/SSO/SCIM, data residency, PII redaction.

- Content governance: Allow/deny source lists, approved claims, and audit logs on model/rule changes.

- Review workflows: Low-confidence or sensitive language → human gate.

10) Continuous learning & RevOps tuning

- Feedback capture: Wins/losses, email replies, meeting outcomes feed the models.

- A/B & decile analysis: Lift by score bands validates real impact in B2B sales segments.

- RevOps levers: Reweight fit vs. intent, adjust decay windows, refine ICP tiers, and blacklist noisy sources.

- KPIs to monitor: Meeting rate, stage velocity, win rate, forecast variance, ACV, and seller hours saved.
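The score-band analysis mentioned above can be sketched in a few lines: rank closed deals by score, split them into equal bands, and compare each band's win rate with the overall rate. The function name and the sample deals are hypothetical; the example uses two bands for brevity, while a decile analysis would use the default of ten.

```python
def lift_by_band(deals, bands=10):
    """deals: (score, won) pairs with won as 1 or 0.

    Returns (band, win_rate, lift) rows, where band 1 holds the highest scores.
    """
    if not deals:
        raise ValueError("need at least one closed deal")
    ranked = sorted(deals, key=lambda d: d[0], reverse=True)
    overall = sum(won for _, won in ranked) / len(ranked)
    size = len(ranked) / bands
    rows = []
    for i in range(bands):
        chunk = ranked[round(i * size):round((i + 1) * size)]
        if not chunk:
            continue  # fewer deals than bands
        rate = sum(won for _, won in chunk) / len(chunk)
        rows.append((i + 1, rate, rate / overall if overall else 0.0))
    return rows


# Hypothetical closed deals: 8 of 10 high scorers won, 2 of 10 low scorers won.
high = [(100 - i, 1 if i < 8 else 0) for i in range(10)]
low = [(50 - i, 1 if i < 2 else 0) for i in range(10)]
rows = lift_by_band(high + low, bands=2)
for band, rate, lift in rows:
    print(band, rate, lift)
```

If the top bands do not show clearly higher win rates than the bottom ones, the score is not separating good accounts from bad ones, whatever the vendor demo suggested.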

Quick example (end-to-end)

- Prospect hits “Pricing” + “Security” pages (3 events, 48 hrs).

- LLM tags SOC 2 + “budget approval”; intent score surges (decay = 7 days).

- Propensity model flags T-30 close probability ↑ with high confidence; missing stakeholder = Finance.

- NBA: “Share ROI calc to Finance, schedule Security SME.”

- LLM drafts email citing the pages viewed; Confidence Score = 88; CRM logs action.

- Outcome feeds back—models reweight what really moved the deal.

That’s how AI-powered sales intelligence tools cut through noise: unify data, extract buyer intent, predict what moves next, and orchestrate grounded actions—so RevOps gets evidence, and sellers get timing that wins.

Benefits of Sales Intelligence for Sales Teams

Sales intelligence is no longer optional for B2B sales teams. Here are ten use cases, each with what happens, why it wins, a quick play to run, and the KPIs to track:

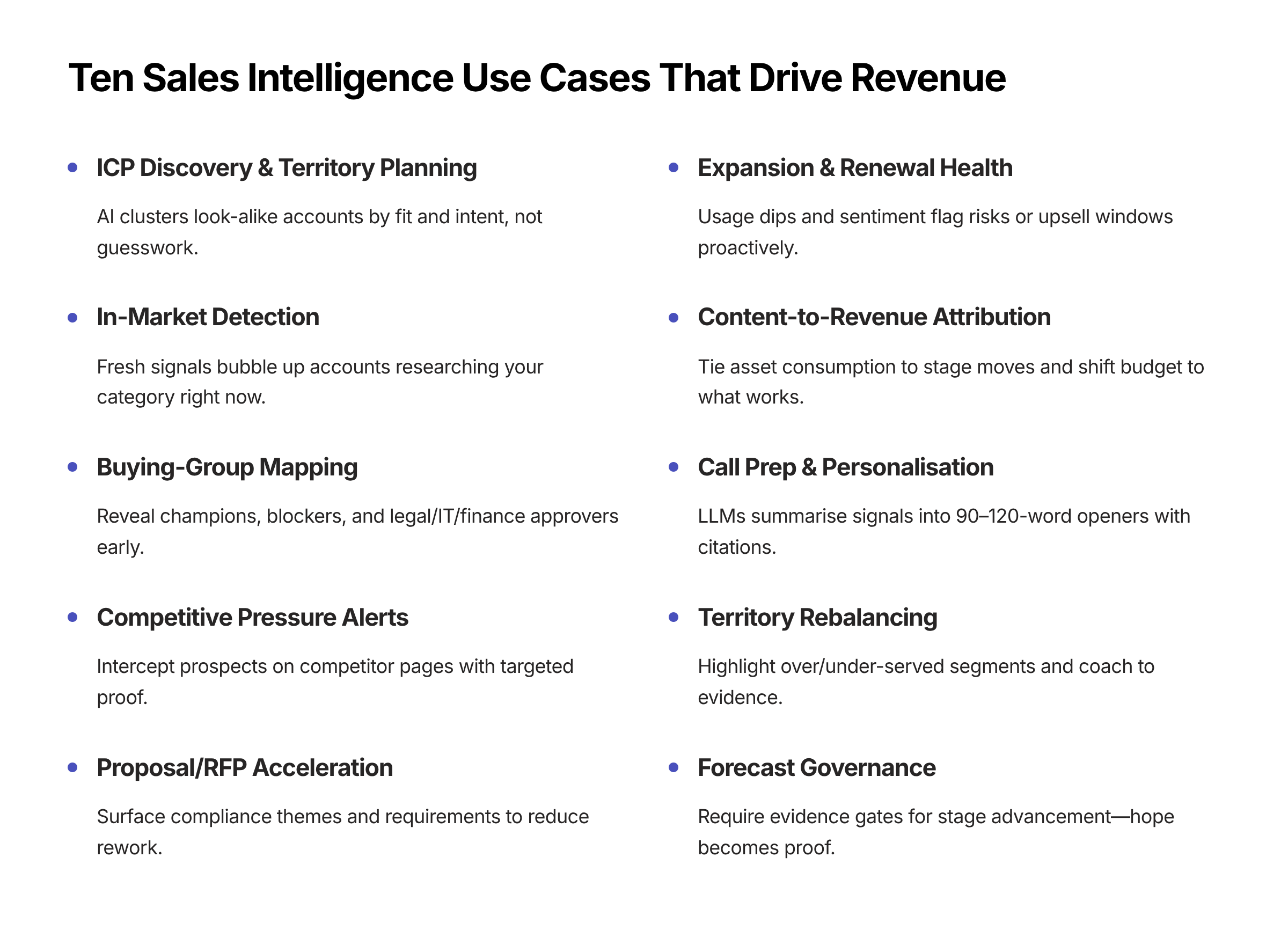

1) ICP discovery & territory planning

What happens: AI clusters look-alike accounts by industry, size, tech stack, and problem signals.

Why it wins: Reps start with fit and buyer intent data, not guesswork.

Quick play: Generate Tier-1 lists with recent “security/compliance/pricing” interest; assign by region and vertical.

KPI: Meetings booked per 100 accounts, Tier-1 coverage, connect rate lift.

2) In-market detection & prioritization

What happens: Fresh web + content + third-party signals bubble up accounts researching your category.

Why it wins: Predictive lead scoring focuses reps on timing, not volume.

Quick play: Auto-route “past 7-day” surges to BDR sequences with proof assets attached.

KPI: Meetings-held %, time-to-first-touch <60s, stage-advance velocity.

3) Buying-group mapping (multi-threading)

What happens: Titles + behavior reveal champions, blockers, and legal/IT/finance approvers.

Why it wins: You de-risk late-stage stalls by engaging the full committee early.

Quick play: If legal or security is missing by Stage 2, trigger a task to share validated security answers and book an SME.

KPI: Roles touched per deal, legal/security addressed pre-proposal, win rate.

4) Competitive pressure alerts

What happens: Signals show prospects on competitor pages or comparing features.

Why it wins: You intercept with targeted proof, not generic talk tracks.

Quick play: Auto-send a 1-to-few email citing the exact comparison topic and link a customer story.

KPI: Reply rate on competitive threads, competitive win rate, cycle time delta.

5) Proposal/RFP acceleration (enterprise cycles)

What happens: Intelligence surfaces compliance themes, pricing blockers, and must-have requirements.

Why it wins: Sales intelligence tools reduce rework and shorten time-to-proposal.

Quick play: Use SparrowGenie’s governed knowledge + Confidence Score to draft answers with citations; route low-confidence lines to SMEs.

KPI: Time-to-proposal, defect rate, shortlisted-to-won ratio.

6) Expansion & renewal health (CS/AM)

What happens: Usage dips + sentiment + new stakeholder activity flag risks or upsell windows.

Why it wins: Proactive saves and targeted expansions beat last-minute rescues.

Quick play: If product usage drops 20% and exec sponsor goes quiet, trigger an ROI recap + exec touch sequence.

KPI: Gross/Net retention, expansion ACV, save rate.

7) Content-to-revenue attribution (Marketing + Sales)

What happens: AI ties asset consumption (case studies, security pages) to meeting creation and stage moves.

Why it wins: Budget shifts to content that moves pipeline, not vanity metrics.

Quick play: Promote assets with highest stage-advance correlation inside sequences and call prep.

KPI: Meetings per asset, stage-advance after asset share, influenced revenue.

8) Call prep & personalization at “one-to-few”

What happens: LLMs summarize recent signals into a 90–120-word opener and 3-bullet talk track.

Why it wins: Faster prep, higher relevance, consistent quality.

Quick play: Require cited openers before dials; sub-threshold confidence routes to manager/SME review.

KPI: Connect→meeting conversion, talk-track adherence, email reply rate.

9) Territory rebalancing & rep coaching

What happens: Intelligence highlights over/under-served segments and playbook adoption.

Why it wins: Managers coach to evidence, not anecdotes.

Quick play: Shift low-signal accounts off overloaded reps; coach to NBA adoption and message grounding.

KPI: Meetings per rep, NBA adoption %, win rate by segment.

10) Forecast governance (RevOps)

What happens: Deals must meet evidence gates (decision-maker verified, meeting held, asset consumed).

Why it wins: Forecast moves from “hope” to evidence-backed probability.

Quick play: Require a linked signal for any stage advancement at T-30; auto-flag slips with missing proof.

KPI: Forecast variance (T-30), evidence-backed pipeline %, slip reasons resolved.

5 Best AI Sales Intelligence Tools (by real-world use case)

These are positioned by signal type and go-to use case—so teams can pick the right engine, not just the loudest brand.

SparrowGenie — Sales Intelligence for RFP-, Proposal-, and Enterprise Cycles

Best for: Complex B2B sales where content, compliance, and buying-group alignment decide deals.

Why it works: Surfaces verified deal signals (security themes, compliance asks, pricing blockers), suggests next-best-actions, and drafts grounded responses with a Confidence Score—so sellers move fast without risking accuracy.

Standout signal: Proposal-grade intent (RFP/RFI cues + stakeholder sentiment) and governed knowledge that acts as a single source of truth across sales, pre-sales, and legal.

Clay — Data Enrichment & Programmatic Prospecting at Scale

Best for: Building hyper-relevant, persona-level lists with fresh web signals.

Why it works: Chains 100+ data providers with LLM prompts to find, enrich, and qualify accounts/contacts programmatically (e.g., reviews, hiring, socials).

Standout signal: Scraped, prompt-driven micro-indicators of pain (e.g., payroll complaints, “doesn’t take cards” reviews) that fuel surgical outreach.

Factors (Factor AI) — Account-Level Intent & Journey Analytics

Best for: Seeing which companies are actually researching your category and where they are in the journey.

Why it works: Tracks website/content engagement and external behaviors to surface in-market accounts, with explainable reasons and timing.

Salesloft — Sales Engagement with AI-Guided Timing & Messaging

Best for: Sequencing and coaching, shaped by engagement intelligence (best time to contact, message guidance).

Why it works: AI insights prioritize steps, lift reply rates, and reduce manual guesswork—especially when paired with intent signals upstream.

HubSpot (Sales Hub) — Pipeline, Analytics, and Operational Intelligence

Best for: Unified CRM + activity intelligence for SMB/mid-market teams.

Why it works: Built-in AI analytics clarify what’s working, where deals stall, and which behaviors correlate with revenue.

(Tip: Pair a data-rich prospector like Clay with a deal-cycle intelligence layer like SparrowGenie, then drive actions through your SEP/CRM. Fit + intent + orchestration beats any single “all-in-one.”)

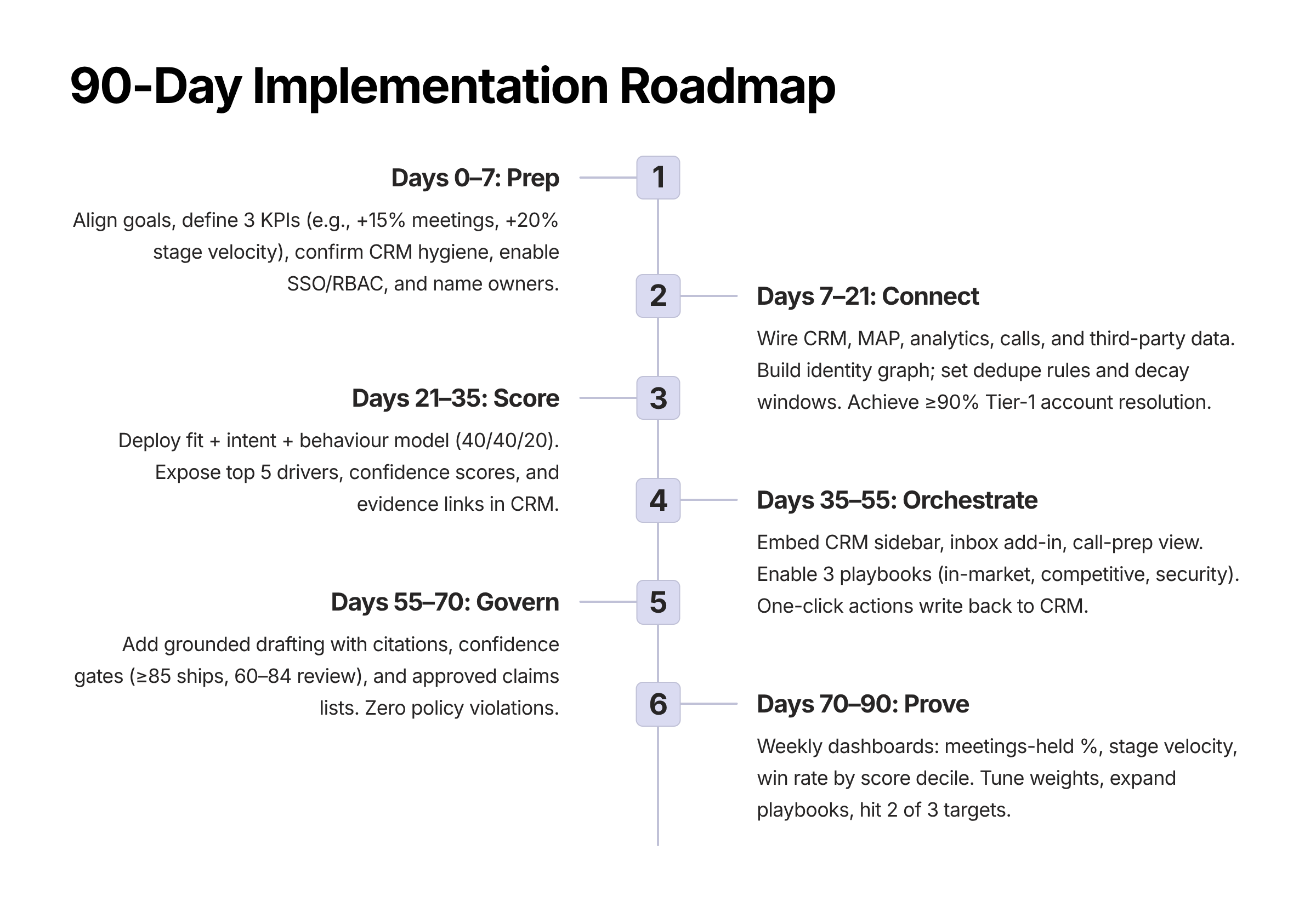

Implementation Checklist (RevOps-Friendly)

Phase 0 — Prep (Days 0–7)

Objectives: Align goals, lock scope, clear data hurdles.

Outcomes & KPIs (1 page)

- Pick 3 outcomes for 90 days: meetings-held %, stage velocity, win rate.

- Define baselines and targets (e.g., +15% meetings, +20% stage velocity).

Data Readiness

- Confirm CRM hygiene: required fields for account, contact, opportunity, primary persona.

- Inventory sources: CRM, MAP, website analytics, call recorder, email/calendar, product usage, third-party buyer intent data.

Access & Security

- Enable SSO/SCIM, RBAC roles (Admin, RevOps, AE, BDR, CSM, Legal).

- Document data residency, PII handling, and audit requirements.

Acceptance criteria: Baselines captured, data sources listed, security approved, owners named.

Phase 1 — Connect & Normalize (Days 7–21)

Objectives: Wire data, map identities, eliminate noise.

Connectors Live

- CRM (bidirectional), MAP (read), analytics (read), call/email (read), product usage (read).

Identity Resolution

- Domain → account mapping; email → contact; contact ↔ opportunity roles.

- Set dedupe rules: company name, domain, HQ location; tie-break by employee count or revenue.

Normalization & Governance

- Standard event schema: {who, role, what, where, when, weight, source}.

- Source allow/deny list v1 (block spam referrers, vague content farms).

- Decay windows (defaults you can tune):

- Pricing/Security pageviews: 7 days

- Comparison pages: 10 days

- Generic blog consumption: 14 days

Acceptance criteria: ≥90% of Tier-1 accounts resolved to a clean identity graph; connectors passing daily.
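The default decay windows from this phase could be applied as in the sketch below. The window lengths come from the list above; the linear fade to zero at the window edge is an assumption made for illustration (a hard cutoff or exponential decay would be equally valid choices), and the signal-type keys are hypothetical names.

```python
from datetime import datetime, timedelta, timezone

# Default windows from the checklist; the key names are hypothetical.
DECAY_WINDOWS_DAYS = {
    "pricing_security": 7,
    "comparison": 10,
    "generic_blog": 14,
}


def decayed_weight(signal_type, observed_at, now, base_weight=1.0):
    """Fade a signal linearly from base_weight to 0 across its window."""
    window = timedelta(days=DECAY_WINDOWS_DAYS[signal_type])
    age = now - observed_at
    if age < timedelta(0) or age >= window:
        return 0.0  # future-dated or stale signals contribute nothing
    return base_weight * (1 - age / window)


now = datetime(2025, 1, 15, tzinfo=timezone.utc)
fresh = decayed_weight("pricing_security", now - timedelta(days=3.5), now)
stale = decayed_weight("comparison", now - timedelta(days=10), now)
print(fresh, stale)  # 0.5 0.0
```

Keeping the windows in one dictionary makes them easy for RevOps to tune per signal type without touching the scoring logic.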

Phase 2 — Scoring & Signals (Days 21–35)

Objectives: Make scores explainable, signals trustworthy.

Fit + Intent + Behavior Model

- Fit (40%): firmographics/technographics vs ICP tiers.

- Intent (40%): third-party + first-party research intensity with decay.

- Behavior (20%): recency/frequency/diversity; stakeholder mix.

Explainability

- Surface top 5 drivers + evidence links (URL, timestamp, persona).

- Show Confidence Score (0–100) with thresholds:

- ≥85: auto-eligible for outreach

- 60–84: human review

- <60: enrichment or disqualify

Quality Gates

- Block accounts with <2 corroborating sources.

- Require persona alignment (e.g., Security interest must include IT/Sec role).

Acceptance criteria: Scores visible in CRM with factors, confidence, and linked evidence.
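The Phase 2 weights, confidence thresholds, and quality gate fit in a short sketch. It assumes each component score is already on a 0–100 scale; the function names and route labels are hypothetical.

```python
# Default weights in percent (Fit 40, Intent 40, Behavior 20).
DEFAULT_WEIGHTS = (40, 40, 20)


def composite_score(fit, intent, behavior, weights=DEFAULT_WEIGHTS):
    """Blend 0-100 fit, intent, and behavior scores into one 0-100 score."""
    if sum(weights) != 100:
        raise ValueError("weights must sum to 100")
    w_fit, w_intent, w_behavior = weights
    return (w_fit * fit + w_intent * intent + w_behavior * behavior) / 100


def route(confidence, corroborating_sources):
    """Apply the quality gate, then the Confidence Score thresholds."""
    if corroborating_sources < 2:
        return "blocked"  # fewer than 2 corroborating sources
    if confidence >= 85:
        return "auto_outreach"
    if confidence >= 60:
        return "human_review"
    return "enrich_or_disqualify"


print(composite_score(80, 90, 50))          # 78.0
print(route(88, corroborating_sources=3))   # auto_outreach
```

Passing a different `weights` tuple, such as `(45, 35, 20)` for an enterprise segment, re-scores accounts without any other change.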

Phase 3 — Orchestration in the Flow (Days 35–55)

Objectives: Put intelligence where work happens—no swivel-chairing.

Surfaces

- CRM sidebar card: score + drivers + next-best-action (NBA).

- Inbox add-in: cited opener + one-click “Add to sequence”.

- Call-prep view: 3-bullet talk track grounded in signals.

Playbooks (enable 3 to start)

- In-market surge (7-day) → BDR one-to-few email + proof asset.

- Competitive comparison → AE counter-narrative + customer story.

- Security/compliance interest → Book SME; attach validated answers.

Guardrails

- Auto-route 60–84 confidence to the SME/manager review queue; hold anything below 60 for enrichment.

- Enforce do-not-contact and territory rules at action time.

Acceptance criteria: Reps can act in one click from CRM/inbox; actions written back to CRM.

Phase 4 — Content Grounding & Compliance (Days 55–70)

Objectives: Keep messages short, cited, and brand-safe.

Grounded Drafting

- 90–120-word emails citing what, where, when (e.g., “Visited /security yesterday”).

- Persona variants (Champion, Legal, Finance, IT).

Policy Controls

- Approved claims list; blocked phrases; region/legal variants.

- Confidence Score gate for AI-assisted text (ship ≥85; review 60–84).

SparrowGenie for enterprise cycles

- Use governed knowledge + citations for proposal/RFP answers.

- Measure time-to-proposal, defect rate, shortlisted→won improvements.

Acceptance criteria: >80% of outbound uses grounded templates; zero policy violations in spot checks.

Phase 5 — Measure, Tune, Scale (Days 70–90)

Objectives: Prove lift, tune thresholds, expand segments.

Dashboards (weekly)

- Creation: connects, meetings, meetings-held % (AI-sourced vs non).

- Progress: time-in-stage, NBA adoption %, multi-thread depth.

- Conversion: win rate by score decile; ACV by buying-group completeness.

- Forecast: T-30 variance; evidence-backed pipeline %.

Model Tuning

- Rebalance Fit/Intent/Behavior weights by segment (e.g., enterprise 45/35/20).

- Adjust decay windows; expand allow/deny lists.

Scale

- Roll from 2 to 5 ICP segments; add CS renewal/expansion plays.

Acceptance criteria: Hit two of three 90-day targets; publish v2 scoring spec.

Quick RACI for Sales Teams (who owns what)

| Workstream | RevOps | Sales Mgr | AEs/BDRs | Marketing | Security/Legal |
| --- | --- | --- | --- | --- | --- |
| Connectors & Identity | R | C | I | C | C |
| Scoring & Decay | R | C | I | C | C |
| Playbooks & NBA | R | A | R | C | C |
| Grounded Content | C | A | R | R | C/A |
| Governance & RBAC | R | I | I | I | A |
| Dashboards & KPIs | R | A | I | C | I |

R = Responsible, A = Accountable, C = Consulted, I = Informed

Default Config (start here, tune later)

Hot account threshold: Score ≥75 and Confidence ≥85 with ≥2 corroborating signals.

Stale signal cutoff: 7 days (pricing/security), 10 days (comparison), 14 days (generic).

NBA order: 1) Book time, 2) Share proof, 3) Add to sequence, 4) Internal intro.

Review SLA: SME queue responses <24h; legal/security <48h.

KPI Definitions (so reporting isn’t fuzzy)

Meetings-held % = Held meetings / Booked meetings (by source, last 30 days).

Stage velocity = Median days in stage (by segment).

Win rate = Closed-won / (Closed-won + Closed-lost).

Forecast variance (T-30) = |Predicted − Actual| / Quota.

Evidence-backed pipeline % = Pipeline $ with ≥1 linked, in-window signal / Total pipeline $.

NBA adoption % = Steps executed from inline actions / Suggested steps.
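For teams that want these definitions in executable form, a minimal sketch follows. The function names and input shapes are hypothetical, and the evidence-backed calculation assumes the denominator is total pipeline value, which the definition above does not state explicitly.

```python
from statistics import median


def ratio(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def meetings_held_pct(held, booked):
    return 100 * ratio(held, booked)


def stage_velocity(days_in_stage):
    """Median days in stage for one segment."""
    return median(days_in_stage)


def win_rate(closed_won, closed_lost):
    return ratio(closed_won, closed_won + closed_lost)


def forecast_variance(predicted, actual, quota):
    return ratio(abs(predicted - actual), quota)


def evidence_backed_pct(opportunities):
    """opportunities: (amount, in_window_signal_count) pairs."""
    total = sum(amount for amount, _ in opportunities)
    backed = sum(amount for amount, signals in opportunities if signals >= 1)
    return 100 * ratio(backed, total)


def nba_adoption_pct(executed_inline, suggested):
    return 100 * ratio(executed_inline, suggested)


# Hypothetical numbers, for illustration only.
print(win_rate(30, 70))                                   # 0.3
print(stage_velocity([5, 9, 14]))                         # 9
print(evidence_backed_pct([(100_000, 2), (50_000, 0), (50_000, 1)]))  # 75.0
```

Pinning each KPI to one function is a cheap way to keep dashboards and QBR decks from drifting to slightly different definitions.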

Change Management (make it stick)

Enablement: 45-min role-based training; 10-min weekly “signals huddle”.

Coaching: Managers review 3 deals/rep/week for signal use and NBA adherence.

Incentives: SPIFF for the first 30 days on meetings-held from AI-sourced accounts.

Communication: Bi-weekly update: wins, playbook of the week, config changes.

Risk & Mitigation

Noisy signals → low trust: tighten allow/deny lists; shorten decay; demand evidence links.

Adoption stalls: embed insights; remove dashboard-only flows; one-click actions.

Black-box scores: expose drivers and weights; publish scoring spec; hold Q&A.

Compliance worries: keep Confidence Score gates; SME/Legal review queues; SparrowGenie for governed content.

One-Week Starter Bundle (copy/paste)

- Turn on in-market (7-day) + competitive + security/compliance signals.

- Enable 3 playbooks (surge, competitor, security).

- Expose scores, drivers, Confidence Score in CRM sidebar.

- Ground email openers with citations; ship if ≥85 confidence.

- Dashboard: meetings-held %, time-in-stage, win rate (AI-sourced vs baseline).

Treat implementation like a RevOps product launch. Connect clean data, make scores explainable, embed actions in the rep’s flow, enforce confidence gates, and prove lift on a tight dashboard. If you’re running enterprise proposals or RFPs, layer SparrowGenie for secure, grounded responses—then scale what works.

Conclusion

AI-powered sales intelligence cuts through noise by finding in-market accounts, mapping buying groups, and recommending actions—with proof. Start narrow, embed insights where reps work, expose why scores are made, and govern the data. Tools like SparrowGenie give enterprise teams secure, explainable intelligence that speeds proposals and lifts win rates—without sacrificing accuracy.

Product Marketing Manager at SurveySparrow

A writer by heart, and a marketer by trade with a passion to excel! I strive by the motto "Something New, Everyday"
