AI for RFP Responses: How to Turn Your 10-Day Marathon Into a 2-Day Sprint

Article written by

Kate Williams


Summary

Most RFPs are roughly 70% repetitive content. AI can handle that work faster and more consistently than a fully manual process, cutting response time from 10 days to 2, boosting win rates, and reducing team burnout. This blog breaks down how AI for RFP responses works, the capabilities to look for, the key benefits, and a repeatable playbook to modernize your RFP workflow.

Responding to RFPs shouldn’t hijack your whole week. Unfortunately, it almost always does: long PDFs, scattered content, last-minute reviews, and a portal that times out right when you hit upload. I’ve watched sales teams go through this again and again.

But what if there were a better way?

AI is everywhere now. But how can sales teams that handle RFPs use it to its fullest and make their daily work easier? AI can read the document, pull your approved answers, draft the sections you need, and package everything in the right format, quickly and cleanly, turning the RFP response into a far smoother task. Let's dive into the details.

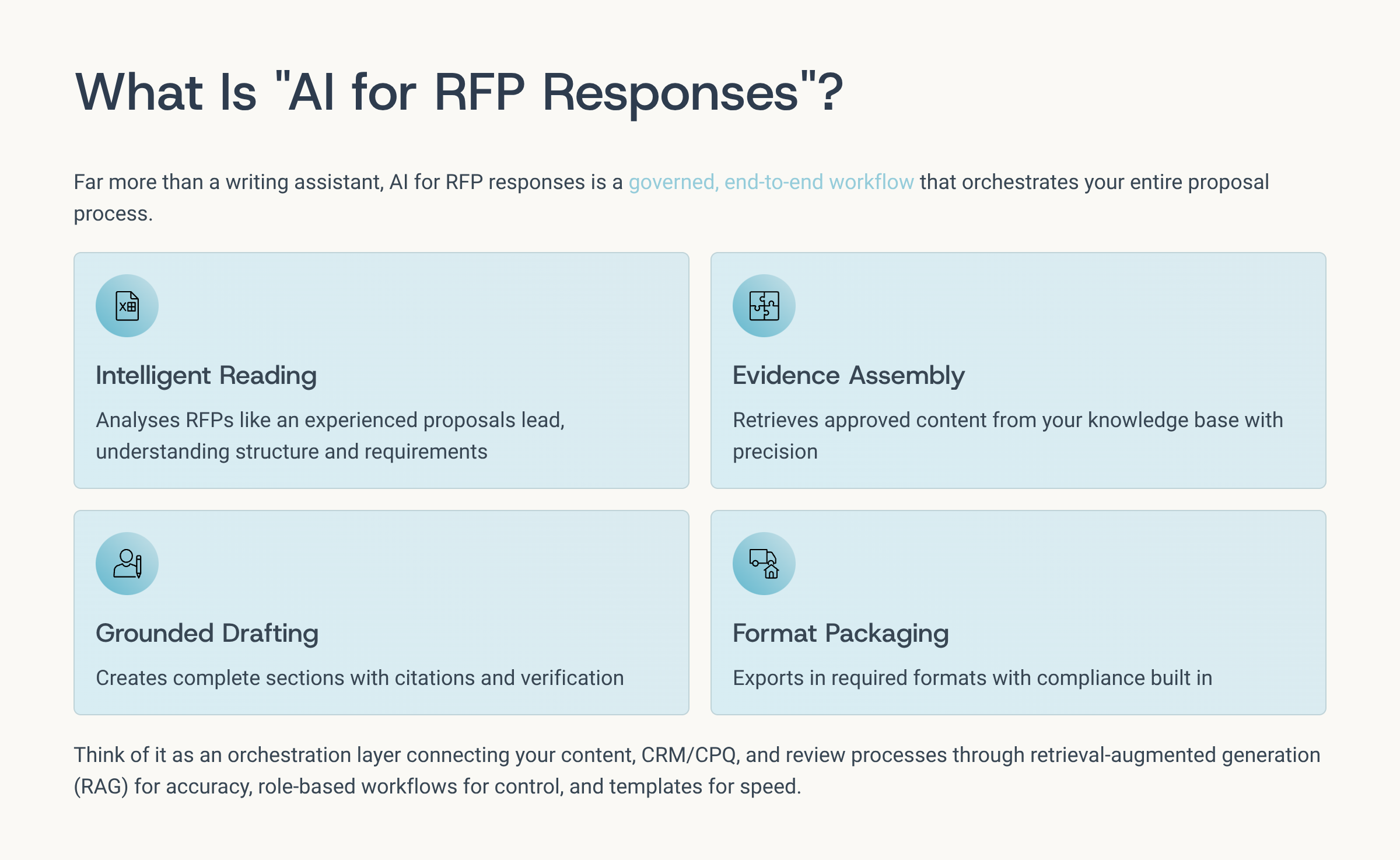

What is “AI for RFP responses”?

“AI for RFP responses” is not just a writing bot but a governed, end-to-end workflow that reads the RFP like a seasoned proposals lead, assembles the right evidence, drafts every section with citations, and packages the response in your required format. Think of it as an orchestration layer on top of your content, CRM/CPQ, and review process: retrieval-augmented generation (RAG) for accuracy, role-based workflows for control, and export templates for speed. Here’s how each stage works in practice:

1) Instantly analyzes the RFP and flags eligibility/risk

- Ingestion & structuring: The RFP (PDF, portal export, or DOCX) is normalized so headings, tables, and lists remain intact. The system extracts issuer details, scope, dates, required forms, and deliverables.

- Requirement mapping: Mandatory vs. rated requirements are separated. The engine highlights “must-haves” (certifications, durations, references, team composition) and red-flags any gaps that could disqualify you.

- Go/No-Go signal: A quick bid decision view rolls up risk, fit, timeline pressure, and estimated effort. Historical win/loss patterns can inform a win-probability indicator so you invest effort where it counts.

Why it matters: Avoids late surprises, eliminates manual skimming, and gets leadership aligned on whether to pursue—within minutes.
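To make requirement mapping concrete, here is a minimal Python sketch. It assumes the RFP has already been split into sentences, and it uses simple keyword rules ("must", "shall", "points", and so on) as a stand-in for the trained classifier or LLM a real platform would use. The keyword lists, `classify`, and `disqualifier_gaps` are all hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword rules; a production system would use a classifier or an LLM.
MANDATORY = re.compile(r"\b(must|shall|required|mandatory)\b", re.IGNORECASE)
RATED = re.compile(r"\b(points?|scored|weighted|evaluated)\b", re.IGNORECASE)


@dataclass
class Requirement:
    text: str
    kind: str  # "mandatory", "rated", or "info"


def classify(sentences):
    """Label each RFP sentence as mandatory, rated, or informational."""
    reqs = []
    for s in sentences:
        if MANDATORY.search(s):
            kind = "mandatory"
        elif RATED.search(s):
            kind = "rated"
        else:
            kind = "info"
        reqs.append(Requirement(s, kind))
    return reqs


def disqualifier_gaps(reqs, capabilities):
    """Return mandatory requirements that mention none of our capability keywords."""
    return [
        r.text
        for r in reqs
        if r.kind == "mandatory"
        and not any(c.lower() in r.text.lower() for c in capabilities)
    ]


sentences = [
    "The vendor must hold ISO 27001 certification.",
    "Up to 20 points will be awarded for the implementation approach.",
    "The vendor shall provide three references from public-sector clients.",
    "Submissions are due on 15 March.",
]
reqs = classify(sentences)
gaps = disqualifier_gaps(reqs, ["ISO 27001", "SOC 2"])
print(gaps)  # ['The vendor shall provide three references from public-sector clients.']
```

Anything `disqualifier_gaps` returns is a candidate red flag for a human to confirm. Keyword matching will miss capabilities that are described in different words.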

2) Retrieves and reuses your best past answers and documents

- Governed knowledge base: Prior responses, security language, SLAs, case studies, resumes, and legal clauses live in a curated, versioned library with owners, expiries, and approvals.

- RAG grounding: For each RFP requirement, the system retrieves approved blocks with metadata filters (product, region, industry, persona) and freshness checks before drafting.

- Evidence attachments: It links to real proofs—certs, SOC/ISO statements, insurance, references—so claims are backed by artifacts, not guesswork.

Why it matters: Reuse is accurate and compliant, not copy-paste roulette. Content stays consistent, on-brand, and audit-ready.
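The retrieval step is where governance pays off. Filters run before anything is ranked, so expired or out-of-region content never reaches the drafting model. Below is a minimal Python sketch under stated assumptions: the `Block` fields are hypothetical, and word overlap stands in for the embedding similarity a real RAG system would typically use.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Block:
    id: str
    text: str
    product: str
    region: str  # a specific region, or "global"
    approved: bool
    expires: date


def tokens(s):
    """Lowercased words with surrounding punctuation stripped."""
    words = (w.strip(".,;:()").lower() for w in s.split())
    return {w for w in words if w}


def retrieve(requirement, library, product, region, today, k=3):
    # 1) Governance and metadata filters run before any ranking.
    candidates = [
        b for b in library
        if b.approved
        and b.expires >= today
        and b.product == product
        and b.region in (region, "global")
    ]
    # 2) Rank by word overlap, a stand-in for embedding similarity.
    q = tokens(requirement)
    candidates.sort(key=lambda b: len(q & tokens(b.text)), reverse=True)
    return candidates[:k]


library = [
    Block("sec-01", "Data is encrypted at rest with AES-256 and in transit with TLS 1.2+.",
          "crm", "eu", True, date(2030, 1, 1)),
    Block("sec-old", "Data is encrypted at rest.", "crm", "eu", True, date(2020, 1, 1)),
    Block("sla-01", "We offer 99.9% monthly availability.", "crm", "global", True, date(2030, 1, 1)),
    Block("sec-us", "Data is encrypted at rest with AES-256.", "crm", "us", True, date(2030, 1, 1)),
]
hits = retrieve("Describe how data is encrypted at rest and in transit.",
                library, product="crm", region="eu", today=date(2025, 6, 1))
print([b.id for b in hits])  # ['sec-01', 'sla-01']
```

The expired `sec-old` block and the US-only `sec-us` block are excluded before ranking, even though both mention encryption.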

3) Drafts every required section (cover letter → pricing)

- Structured drafting: The AI composes a cover letter, executive summary, technical approach, implementation plan, staffing, and compliance statements—all grounded in retrieved sources with inline citations or footnotes.

- Pricing intelligence: Pulls day rates, past invoices, or CPQ data to propose a transparent pricing table with assumptions and options (e.g., base period + extension).

- Role-based reviews: Proposal managers, SMEs, security, and legal see section ownership, suggested edits, and status (Draft → SME Reviewed → Legal Approved) with an audit trail.

- Quality guardrails: A Confidence Score (accuracy, source reliability, and citation depth) flags low-confidence passages for human review.

Why it matters: Writers edit instead of reinventing. Reviewers focus on risk and nuance, not formatting and hunting for the latest boilerplate.
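The article doesn't specify how a Confidence Score combines its three signals, so the sketch below simply assumes a weighted sum. The weights, the 0.7 review threshold, and the `Passage` fields are hypothetical. They are chosen only to show how low-confidence passages get routed to humans.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold; tune them against your own review outcomes.
WEIGHTS = {"accuracy": 0.5, "source_reliability": 0.3, "citation_depth": 0.2}
REVIEW_THRESHOLD = 0.7


@dataclass
class Passage:
    section: str
    accuracy: float            # 0..1, how well claims agree with the cited sources
    source_reliability: float  # 0..1, e.g. approved, fresh sources score higher
    citations: int             # number of distinct sources cited


def confidence(p, max_citations=3):
    """Weighted 0..1 score; citation depth saturates at max_citations."""
    depth = min(p.citations, max_citations) / max_citations
    return (WEIGHTS["accuracy"] * p.accuracy
            + WEIGHTS["source_reliability"] * p.source_reliability
            + WEIGHTS["citation_depth"] * depth)


def needs_review(passages, threshold=REVIEW_THRESHOLD):
    """Sections whose score falls below the threshold go to an SME or legal."""
    return [p.section for p in passages if confidence(p) < threshold]


drafts = [
    Passage("Security", accuracy=0.95, source_reliability=0.9, citations=3),
    Passage("Pricing", accuracy=0.6, source_reliability=0.5, citations=1),
]
print(needs_review(drafts))  # ['Pricing']
```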

4) Exports a polished, compliant proposal—fast

- Output fidelity: Exports to the buyer’s requested format—DOCX, PDF, portal forms, or multi-file bundles—with required attachments embedded and naming conventions applied.

- Compliance packaging: Completeness checks verify every question, form, and appendix is answered; mandatory certifications and signatures are included.

- Version control & audit: Every change is tracked. You can reproduce “what was sent” with sources, approvals, and timestamps for audits or protests.

Why it matters: Zero last-mile chaos. You ship a clean, compliant, professionally formatted proposal on time—often days earlier.
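A completeness check is mostly bookkeeping, which is why it automates well. This minimal sketch assumes each RFP question has an ID and that the required attachments are known by file name. The function name and data shapes are hypothetical.

```python
def completeness_report(question_ids, answers, required_attachments, attached_files):
    """Flag unanswered (or blank) questions and missing attachments before export."""
    unanswered = [q for q in question_ids if not answers.get(q, "").strip()]
    missing = sorted(set(required_attachments) - set(attached_files))
    return {
        "unanswered": unanswered,
        "missing_attachments": missing,
        "ready": not unanswered and not missing,
    }


report = completeness_report(
    question_ids=["1.1", "1.2", "2.1"],
    answers={"1.1": "Yes, we hold ISO 27001 certification.", "1.2": "   "},
    required_attachments=["ISO27001.pdf", "W9.pdf"],
    attached_files=["ISO27001.pdf"],
)
print(report)
# {'unanswered': ['1.2', '2.1'], 'missing_attachments': ['W9.pdf'], 'ready': False}
```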

What makes it “governed” (and enterprise-ready)?

- Access controls: Granular permissions by workspace, content type, and section.

- Lifecycle management: Owners, review cadences, and expiries keep content fresh.

- Policy enforcement: Required phrases, disclaimers, and brand rules applied at draft time.

- Traceability: Citations back to approved sources and complete review history.

- Integrations: Syncs with CRM/CPQ, storage (Drive/SharePoint), and e-signature/portals.

Outcomes you can expect

- Speed: Cut response time by ~50–70% with instant analysis and grounded drafting.

- Capacity: Handle 2–3× more RFPs without adding headcount.

- Quality: Higher scoring from consistency, complete answers, and accurate pricing.

- Compliance: Fewer disqualifications; audit-ready submissions with full traceability.

AI for RFP responses is a system of action for proposals: it decides quickly, writes accurately, proves claims with citations, and packages everything cleanly—so your team spends time winning the right bids, not wrestling with PDFs.

The Manual vs. AI RFP Response

RFPs used to feel like a relay race with too many handoffs and not enough runners. AI turns it into a coordinated playbook where work happens in parallel, decisions are evidence-based, and every section is grounded in approved content.

Snapshot Comparison of Manual vs. Automated RFP Response

| Step | The old way (manual) | The AI way (governed & grounded) |
|---|---|---|
| Intake & triage | Someone skims a 60–100-page PDF, drops notes in a doc, and pings teams on Slack/Email. Deadlines slip. | Upload once → instant brief: issuer, scope, due date, forms, must-haves, risks, and a go/no-go signal everyone can trust. |
| Requirement mapping | Junior analyst builds a checklist in Sheets; misses rated vs mandatory nuances; rework later. | Structured extraction separates mandatory/rated items, flags disqualifiers, and links each requirement to an owner. |
| Content sourcing | Hunt through drives, inboxes, “final_v7_really_final.docx.” Boilerplate is stale and inconsistent. | Retrieval-augmented generation (RAG) pulls only approved, fresh blocks (security, SLAs, case studies) with owners and expiries. |
| Drafting | Blank page syndrome; copy-paste roulette; style and claims vary by writer. | Grounded drafts for every section (cover letter → pricing) with citations back to governed sources for traceability. |
| Pricing | Guesswork from old spreadsheets; hidden assumptions; last-minute finance scramble. | Pricing tables seeded from CPQ/day rates/invoices with clear inclusions, options, and assumptions. |
| Reviews | Email chains, conflicting edits, version chaos; legal and security looped too late. | Role-based workflows, section ownership, and audit trails; low-confidence passages auto-flagged for SME/legal review. |
| Compliance | Manual checklists; missed forms/appendices; risky claims sneak in. | Automated completeness checks, policy enforcement (phrases, disclaimers), and attachment packaging by buyer format. |
| Export & delivery | Formatting fire-drills; misnamed files; portal submissions at 11:59 PM. | One-click export to DOCX/PDF/portal bundles with naming conventions, signatures, and timestamps, well before the deadline. |
| Learning loop | Tribal knowledge, no feedback to the library; the same mistakes recur. | Post-mortems feed the KB; win/loss signals improve go/no-go, content quality, and pricing accuracy over time. |

What the old way feels like

- Linear & slow. Work waits for “the next person,” creating idle time and deadline risk.

- Search fatigue. Hours lost finding “the right” paragraph or the latest security statement.

- Inconsistency. Tone, claims, and numbers drift across sections and submissions.

- Compliance roulette. Mandatory requirements or forms get missed; disqualifications happen.

- No memory. Lessons learned don’t flow back into content; teams repeat avoidable work.

What the AI way enables

- Parallelization by design. Intake, mapping, drafting, and reviews progress simultaneously with clear owners and SLAs.

- Ground truth drafting. Every line is anchored to approved sources with citations, so edits start from “right,” not “rough.”

- Decision clarity. Early win-probability and risk flags keep effort focused on winnable bids.

- Audit-ready compliance. Required phrases, forms, and attachments are enforced before export.

- Compounding advantage. Each submission improves the knowledge base, increasing speed and win rates next time.

Impact (measurable deltas)

- Turnaround: Days → hours for first drafts; final export days earlier.

- Capacity: 2–3× more RFPs handled without new headcount.

- Quality: Fewer defects, tighter compliance, consistent voice.

- Cost: Less SME and legal thrash; more time for strategy and price shaping.

The old way is a system of record pushing documents around. The AI way is a system of action—it reads, reasons, drafts, proves, and packages—so your team shows up faster, cleaner, and more competitive on every bid.

What are Automated RFP Response Tools?

Think of AI for RFP responses as the brain and automated RFP tools as the body. AI reads and reasons—extracts requirements, grounds drafts with approved content (RAG), scores confidence, and flags risks. The tooling wraps that intelligence in workflows—owners, SLAs, audit trails—plus buyer-format exports, portal packaging, and compliance checks. Together, they turn a clever model into a reliable, repeatable submission machine.

What this looks like in practice: upload once → AI creates the brief and compliance matrix → automated tool assigns sections and deadlines → AI drafts with citations and pricing seeds → tool routes low-confidence lines to SMEs/legal → one-click DOCX/PDF/portal export with naming rules and attachments. Result? First drafts in hours, clean submissions days earlier, and a knowledge base that compounds wins over time.

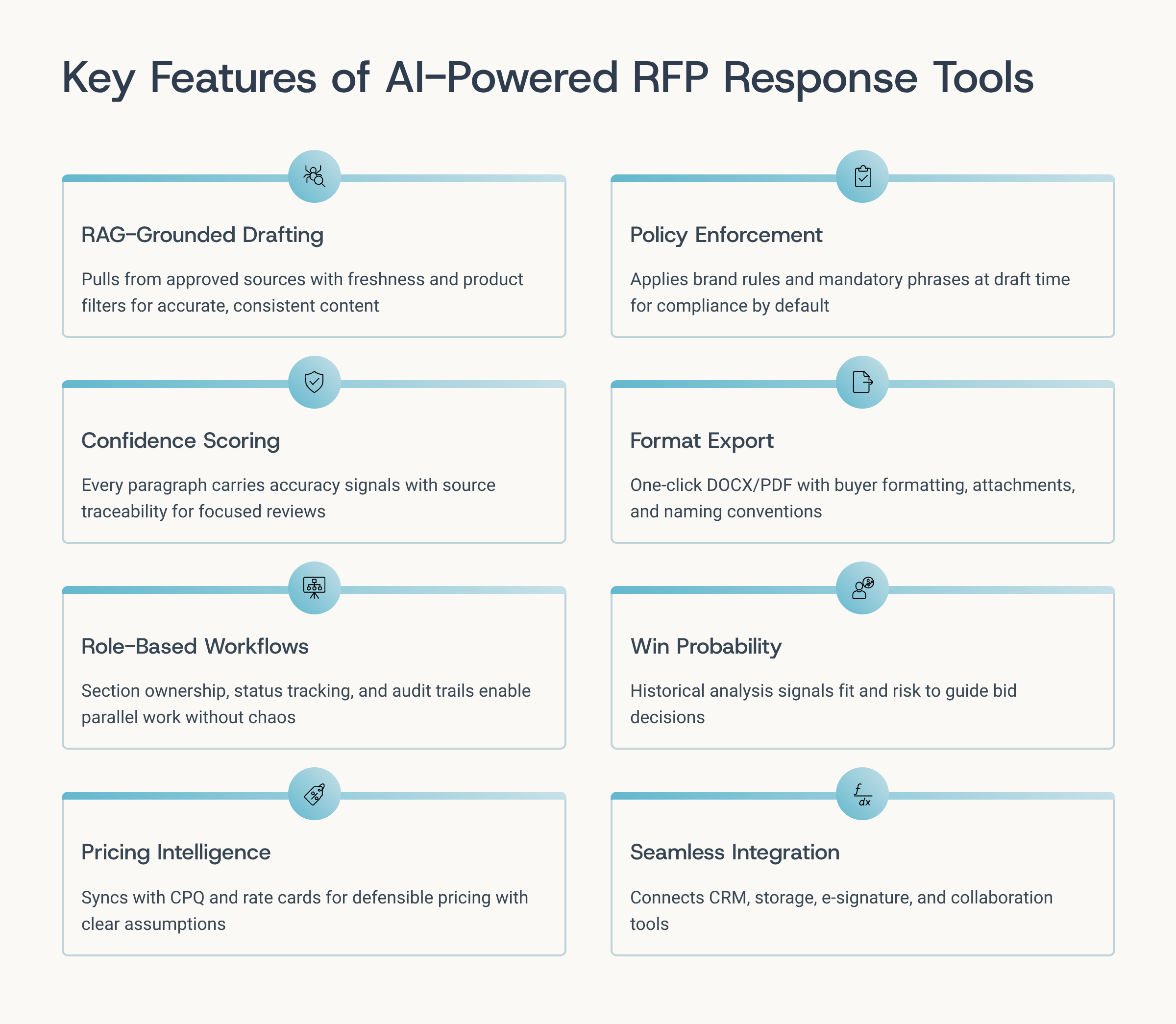

Key Capabilities to Look for in Automated RFP Response Tools

When you evaluate “AI for RFP responses,” don’t just look for a smart writer. You need a governed system that reads, reasons, drafts, proves, and packages—end to end. Here are the must-have capabilities, plus the practical “so what?” for each.

Instant intake & brief generation

Parses PDFs/DOCs/portals, preserves structure (headings/tables), and produces a one-page brief: issuer, scope, due date, forms, buyer format, and section map.

Why it matters: Aligns the team in minutes; stops the day-one scramble.

Requirement mapping & gap checks

Separates mandatory vs. rated criteria; builds a live compliance matrix; flags disqualifiers and content gaps early.

Why it matters: Prevents last-minute “we can’t bid” discoveries and missed checkboxes.

RAG-grounded drafting (not free-writing)

Retrieval-augmented generation pulls from your approved answer blocks, security language, SLAs, case studies, resumes, and policies—with freshness, region, and product filters.

Why it matters: Accurate, consistent copy from the start; fewer rewrites and legal escalations.

Confidence Score & traceability

Every paragraph carries a confidence signal (accuracy, source reliability, and citation depth) and links back to its sources.

Why it matters: Reviewers know where to focus and can audit claims in seconds.

Role-based workflows & section ownership

Clear owners for each section; statuses (Draft → SME → Legal → Final); comments, assignments, and SLAs with a full audit trail.

Why it matters: Parallel work without version chaos; accountability is built in.
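Section statuses behave like a small state machine. Each section may only move along allowed transitions, and every move is logged. The sketch below assumes the Draft → SME → Legal → Final flow described above, with reviewers allowed to send work back to Draft. The `Section` class and transition table are hypothetical.

```python
from datetime import datetime, timezone

# Allowed moves for the Draft -> SME -> Legal -> Final flow (reviewers may send work back).
TRANSITIONS = {
    "Draft": {"SME"},
    "SME": {"Legal", "Draft"},
    "Legal": {"Final", "Draft"},
    "Final": set(),
}


class Section:
    def __init__(self, name, owner):
        self.name = name
        self.owner = owner
        self.status = "Draft"
        self.audit = []  # (UTC timestamp, actor, from_status, to_status)

    def move(self, to_status, actor):
        """Change status if the transition is allowed, recording it in the audit trail."""
        if to_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.name}: cannot move from {self.status} to {to_status}")
        self.audit.append((datetime.now(timezone.utc), actor, self.status, to_status))
        self.status = to_status


security = Section("Security", owner="alice")
security.move("SME", actor="bob")
security.move("Legal", actor="carol")
security.move("Final", actor="dave")
print(security.status, len(security.audit))  # Final 3
```

Rejecting illegal moves, such as jumping from Draft straight to Final, is what stops a section from skipping legal review.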

Resume/CV & credential matcher

Parses team bios, auto-maps certifications, tenure, industry experience, and past contract values to RFP criteria, and surfaces gaps.

Why it matters: Increases scored points on rated sections; protects against eligibility misses.
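At its simplest, credential matching is a set comparison per role. This sketch assumes the RFP criteria have already been extracted as required certifications plus a minimum number of years of experience. The data shapes and `match_staff` are hypothetical, and real matchers would also weigh industry experience and past contract values.

```python
def match_staff(criteria, staff):
    """criteria: role -> (set of required certs, minimum years). staff: list of dicts."""
    matches = {}
    for role, (required_certs, min_years) in criteria.items():
        matches[role] = [
            person["name"]
            for person in staff
            if required_certs <= set(person["certs"]) and person["years"] >= min_years
        ]
    # A role with no qualifying person is an eligibility gap to resolve before bidding.
    gaps = [role for role, names in matches.items() if not names]
    return matches, gaps


criteria = {
    "Project Manager": ({"PMP"}, 5),
    "Security Lead": ({"CISSP"}, 8),
}
staff = [
    {"name": "Ana", "certs": ["PMP", "CSM"], "years": 7},
    {"name": "Raj", "certs": ["CISSP"], "years": 6},
]
matches, gaps = match_staff(criteria, staff)
print(matches)  # {'Project Manager': ['Ana'], 'Security Lead': []}
print(gaps)     # ['Security Lead']
```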

Pricing intelligence & CPQ/day-rate sync

Seeds pricing tables from CPQ, historical invoices, or rate cards; adds assumptions, options (base + extensions), and taxes/fees if required.

Why it matters: Faster, defensible pricing with fewer finance fire drills.
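Here is a minimal sketch of seeding a pricing table from a rate card, with a base period, an optional extension, and tax. The rates, the monthly-support model for the extension, and the 10% tax rate are all hypothetical. `Decimal` is used because binary floats can introduce rounding errors in currency arithmetic.

```python
from decimal import Decimal, ROUND_HALF_UP


def price_table(day_rates, effort_days, tax_rate,
                extension_months=0, monthly_support=Decimal("0")):
    """Build line items from a rate card, then add an optional extension and tax."""
    lines = [(role, days, day_rates[role], day_rates[role] * days)
             for role, days in effort_days.items()]
    base = sum((line[3] for line in lines), Decimal("0"))
    extension = monthly_support * extension_months
    subtotal = base + extension
    # Round tax to cents, half-up, as most invoices expect.
    tax = (subtotal * tax_rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return {"lines": lines, "base": base, "extension": extension,
            "tax": tax, "total": subtotal + tax}


quote = price_table(
    day_rates={"Consultant": Decimal("900"), "Architect": Decimal("1200")},
    effort_days={"Consultant": 20, "Architect": 5},
    tax_rate=Decimal("0.10"),
    extension_months=12,
    monthly_support=Decimal("1000"),
)
for role, days, rate, amount in quote["lines"]:
    print(role, days, rate, amount)
print("Total incl. tax:", quote["total"])  # Total incl. tax: 39600.00
```

Keeping base and extension as separate figures makes it easy to present them as options, as the section above suggests.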

Policy & brand enforcement

Applies mandated phrases, disclaimers, approved risk language, and brand style at draft time; blocks unapproved wording in sensitive sections (security, legal).

Why it matters: Compliance by default; consistent voice across submissions.
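Draft-time policy enforcement can start as plain pattern checks per section. The rule sets below are hypothetical examples, not recommended legal language. They cover a required contract reference and banned absolute claims in the Security section.

```python
import re

# Hypothetical policy rules, keyed by section name.
REQUIRED = {
    "Security": ["subject to the Master Services Agreement"],
}
BANNED = {
    "Security": [r"\bguarantee[sd]?\b", r"\b100% secure\b"],
}


def policy_violations(section, text, required=REQUIRED, banned=BANNED):
    """Return human-readable issues: missing mandated phrases and banned wording."""
    issues = []
    for phrase in required.get(section, []):
        if phrase.lower() not in text.lower():
            issues.append(f"missing required phrase: {phrase!r}")
    for pattern in banned.get(section, []):
        if re.search(pattern, text, re.IGNORECASE):
            issues.append(f"banned wording: {pattern!r}")
    return issues


draft = "We guarantee the platform is 100% secure against all threats."
for issue in policy_violations("Security", draft):
    print(issue)
```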

Buyer-format exports & portal packaging

One-click DOCX/PDF with styles, page numbering, and TOC; auto-built appendix bundles; optional portal form fill for common fields; strict file naming.

Why it matters: Zero last-mile thrash; you submit earlier and cleaner.

Completeness & red-flag checks

Automated “all questions answered” verification, attachment presence checks, signature blocks, and certification inclusions.

Why it matters: Eliminates silent disqualifiers and embarrassing omissions.

Win-probability & bid/no-bid assist

Signals fit and risk based on your win/loss history, vertical, deal size, delivery model, and requirement match.

Why it matters: Invest time where you can win; say “no” faster when you can’t.
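One simple, transparent way to produce a win-probability signal is to take a smoothed historical win rate for similar bids and scale it by how well you meet the requirements. This is a hypothetical sketch, not how any specific platform scores bids. The similarity rule, the add-one smoothing, and the 0.25 Go threshold are all assumptions.

```python
def win_probability(history, vertical, size_band, requirement_fit):
    """Smoothed win rate on similar past bids, scaled by requirement fit (0..1)."""
    similar = [h for h in history
               if h["vertical"] == vertical and h["size_band"] == size_band]
    wins = sum(1 for h in similar if h["won"])
    # Add-one (Laplace) smoothing keeps the estimate sensible with little history.
    base_rate = (wins + 1) / (len(similar) + 2)
    return base_rate * requirement_fit


def bid_decision(probability, threshold=0.25):
    return "Go" if probability >= threshold else "No-Go"


history = [
    {"vertical": "healthcare", "size_band": "mid", "won": True},
    {"vertical": "healthcare", "size_band": "mid", "won": True},
    {"vertical": "healthcare", "size_band": "mid", "won": False},
    {"vertical": "retail", "size_band": "large", "won": False},
]
p = win_probability(history, "healthcare", "mid", requirement_fit=0.8)
print(round(p, 2), bid_decision(p))  # 0.48 Go
```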

Content governance & freshness controls

Owners, review cadences, expiries, and change logs for every reusable block; side-by-side diffs and rollback.

Why it matters: Your library stays accurate, current, and audit-ready.
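Freshness controls come down to two date checks per block. Is the block past its expiry, and is it overdue for its scheduled review? This sketch assumes each block records an owner, a last-review date, a review cadence in days, and an expiry date. The field names are hypothetical.

```python
from datetime import date, timedelta


def stale_blocks(blocks, today):
    """Group expired or review-overdue blocks by owner so each owner gets one to-do list."""
    report = {}
    for b in blocks:
        review_due = b["last_reviewed"] + timedelta(days=b["review_every_days"])
        if b["expires"] < today or review_due < today:
            report.setdefault(b["owner"], []).append(b["id"])
    return report


blocks = [
    {"id": "sec-encryption", "owner": "security", "last_reviewed": date(2025, 1, 10),
     "review_every_days": 90, "expires": date(2026, 1, 1)},
    {"id": "sla-uptime", "owner": "support", "last_reviewed": date(2025, 5, 1),
     "review_every_days": 180, "expires": date(2026, 5, 1)},
    {"id": "iso-cert", "owner": "security", "last_reviewed": date(2025, 5, 20),
     "review_every_days": 365, "expires": date(2025, 6, 1)},
]
print(stale_blocks(blocks, today=date(2025, 6, 15)))
# {'security': ['sec-encryption', 'iso-cert']}
```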

Security & data residency controls

Siloed workspaces, least-privilege access, PII masking, encryption, and regional data residency options; exportable audit logs.

Why it matters: Enterprise compliance and buyer trust, especially on public-sector bids.

Testing sandbox & hallucination guardrails

Built-in tests to validate answers against sources before publish; blocks unsupported claims in restricted sections.

Why it matters: Cuts risk from LLM creativity; speeds safe approvals.

Analytics & continuous improvement

Post-submission analytics (time to draft, review bottlenecks), answer performance, and content gap reports feeding back into the KB.

Why it matters: Each response gets faster and better—your advantage compounds.

Integrations that actually matter

CRM/CPQ for account/pricing, storage (Drive/SharePoint/Box), e-signature, IdP/SSO, ticketing (for SME requests), and Slack/Teams for notifications.

Why it matters: No swivel-chairing; data is consistent, and handoffs are automated.

Multi-doc & multi-stakeholder support

Handles RFP + SOW + compliance annexes + forms as one orchestrated package; supports internal/external reviewers.

Why it matters: Complex bids become manageable and traceable.

Template kits & style presets

Reusable skeletons for cover letter, exec summary, method, staffing, and pricing; buyer-specific styles (government vs. enterprise).

Why it matters: Consistent structure; new writers become productive on day one.

Prioritize platforms that combine governed content, RAG-grounded drafting, measurable confidence, and frictionless export. Those are the capabilities that turn “nice AI copy” into repeatable, compliant wins.

Benefits of Using an AI-powered RFP Response Platform

AI for RFP responses isn’t just “faster writing.” It compresses the entire proposal lifecycle—qualification → drafting → review → export—so you submit earlier, score higher, and bid more without burning out your team.

1) Turnaround time: days → hours

- What changes: Instant intake, auto-mapped requirements, and grounded drafts cut first-draft time from multi-day to same-day.

- Impact you’ll feel: Earlier submissions, more time for strategy, fewer 11:59 PM scrambles.

- Typical lift: 50–70% faster from intake to export.

2) Capacity without headcount

- What changes: Parallel workflows and reusable, approved blocks mean the same team can run more bids in flight.

- Impact you’ll feel: You can pursue stretch/opportunistic RFPs instead of skipping them.

- Typical lift: 2–3× more RFPs handled per quarter.

3) Higher win rates (quality + completeness)

- What changes: Every section is grounded in verified content, mapped to the scoring rubric, with gaps flagged early.

- Impact you’ll feel: Fewer zeroed sections, stronger rated scores, tighter alignment to buyer language.

- Typical lift: +5–15 pts on rated criteria; +20–30% relative win-rate increase on targeted segments.

4) Compliance by default

- What changes: Mandatory criteria, forms, signatures, and certifications are enforced before export; sensitive sections use pre-approved language.

- Impact you’ll feel: Fewer disqualifications, less legal/security back-and-forth, and audit-ready packages.

- Typical lift: 50–80% reduction in defects/omissions at final review.

5) Pricing accuracy and speed

- What changes: Pull day rates/CPQ/invoices to seed pricing tables with assumptions, options, and taxes—no last-minute spreadsheet archaeology.

- Impact you’ll feel: Faster approvals, fewer rework loops, clearer commercial positioning.

- Typical lift: Pricing assembly time down 60–75%; approval cycles compressed by 1–3 days.

6) SME and legal time back

- What changes: Confidence scoring and source citations route only low-confidence or novel content to experts.

- Impact you’ll feel: SMEs review what matters, not boilerplate; legal focuses on risk, not redlines on formatting.

- Typical lift: 30–50% reduction in SME/legal review hours per submission.

7) Forecast visibility and bid discipline

- What changes: Win-probability and requirement fit surface early; the pipeline shows real effort vs. likelihood to win.

- Impact you’ll feel: Cleaner forecast calls, faster “no-bid” decisions, and better utilization of scarce experts.

- Typical lift: 20–40% fewer zombie pursuits; higher concentration of effort on winnable deals.

8) Brand and message consistency

- What changes: Approved answer blocks, style presets, and policy enforcement keep tone, claims, and numbers consistent across submissions.

- Impact you’ll feel: Stronger buyer confidence; fewer contradictions between sections/proposals.

9) Knowledge compounding (each bid gets easier)

- What changes: Post-mortems and scored answers feed the knowledge base; expiries and owners keep content fresh.

- Impact you’ll feel: Reuse quality rises over time; new writers ramp fast; institutional memory is captured.

- Typical lift: Reuse coverage grows to 70–90% of recurring sections within a few cycles.

10) Lower total cost of proposal (TCOP)

- What changes: Less manual hunting, fewer rework loops, reduced context-switching and after-hours fire drills.

- Impact you’ll feel: Hard cost savings in hours plus soft savings in employee fatigue and attrition risk.

- Typical lift: 25–45% reduction in hours per RFP at steady state.

You submit sooner, bid more, and win more—while cutting risk and effort. That’s what “moves the needle” in an AI-enabled RFP practice.

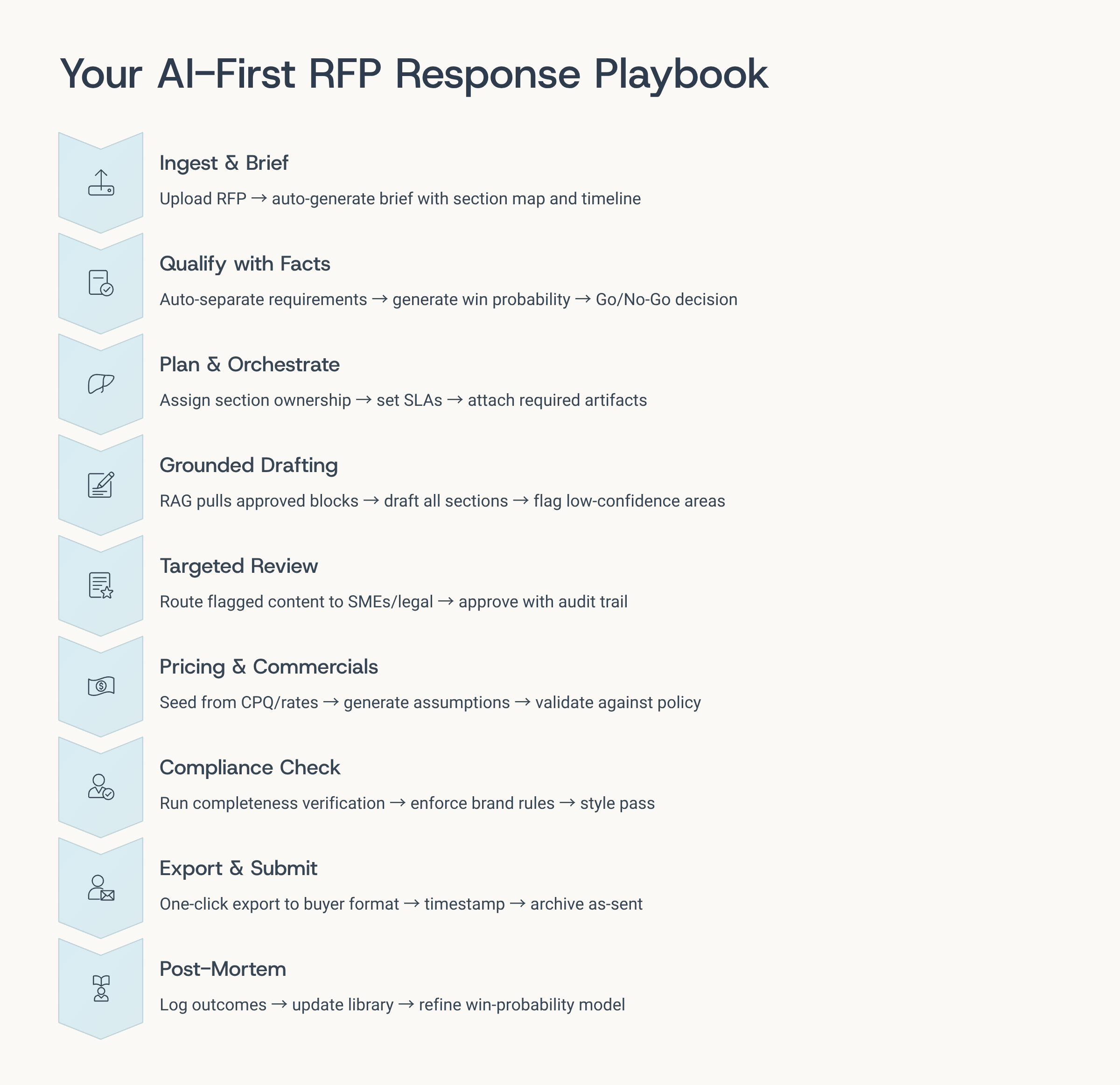

Suggested AI-first RFP flow (repeatable playbook)

Use this as your “same every time” operating model. Each stage has a clear objective, owners, outputs, and exit criteria so the team moves in parallel—not in circles.

1) Ingest & brief

Objective: Get everyone aligned in minutes.

Actions:

- Upload the RFP (PDF/DOCX/portal export). Auto-preserve structure (headings, tables).

- Generate an AI brief: issuer, scope, due date, submission format, forms, annexures.

- Create a living section map (what’s asked → where it will be answered).

Owners: Proposal lead (PL).

Outputs: 1-page brief, section map, timeline.

Exit criteria: Stakeholders acknowledge the brief; timeline accepted.

2) Qualify with facts (Go/No-Go)

Objective: Decide early if it’s worth it.

Actions:

- Auto-separate mandatory vs. rated requirements; run gap checks.

- Generate win probability from historical wins/losses and requirement fit.

- Flag blockers (certifications, references, past contract values, SLAs).

Owners: PL with Sales, Delivery, Legal/Sec.

Outputs: Go/No-Go memo with risks, effort estimate, and mitigation plan.

Exit criteria: Decision logged; if “Go,” budgeted hours and approvers assigned.

3) Plan & orchestrate

Objective: Turn the brief into owned work.

Actions:

- Assign section ownership (Exec Summary, Approach, Staffing, Security, Pricing).

- Set SLAs (Draft → SME → Legal → Final) and notification rules (Slack/Teams).

- Pre-attach must-have artifacts (certs, insurances, case studies).

Owners: PL; RevOps/PMO for capacity view.

Outputs: RACI, calendar, reviewers list.

Exit criteria: Everyone knows their section, due date, and reviewer.

4) Grounded drafting (no blank pages)

Objective: Produce a credible first draft fast.

Actions:

- Use RAG to pull approved blocks (security, SLAs, case studies, bios) by product/region/freshness.

- Draft cover letter → pricing notes with inline citations to sources.

- For resumes/CVs, auto-match credentials to RFP criteria; flag gaps.

Owners: Section owners; AI editor.

Outputs: Versioned Draft-1 across all sections; confidence scores.

Exit criteria: All sections drafted; low-confidence areas flagged.

5) SME & legal/security review (targeted)

Objective: Review where it matters, not everywhere.

Actions:

- Route only low-confidence or novel passages to SMEs/legal.

- Provide source links; require each reviewer to approve the passage, edit it, or replace it with an approved block.

- Capture rationale (why changed) to improve the library.

Owners: SMEs, Legal/Sec, PL.

Outputs: Draft-2 with approvals, redlines resolved, audit trail complete.

Exit criteria: Confidence scores acceptable; risk language approved.

6) Pricing & commercials

Objective: Fast, defensible pricing.

Actions:

- Seed pricing from CPQ/day-rates/invoices; include base + options/extensions.

- Auto-generate assumptions, inclusions/exclusions, and acceptance criteria.

- Validate taxes, T&Cs, liability caps against policy.

Owners: Finance/RevOps, Sales leadership.

Outputs: Pricing tables, commercial caveats, approval record.

Exit criteria: Commercials approved; numbers synchronized across the doc.

7) Compliance, completeness & style pass

Objective: Ship a clean, compliant package.

Actions:

- Run completeness checks: every question answered; forms, signatures, appendices present.

- Enforce brand/policy rules (mandated phrases, disclaimers, tone).

- Check accessibility and formatting (styles, page numbers, TOC, cross-refs).

Owners: PL, Editorial QA.

Outputs: Release-candidate (RC) document(s).

Exit criteria: Zero blocking issues; compliance checklist signed.

8) Export & submission

Objective: Submit early with zero last-mile chaos.

Actions:

- One-click export to buyer format: DOCX/PDF/portal bundle; apply file naming conventions.

- Attach certifications and required forms; timestamp and archive “as-sent” set.

- If portal: prefill common fields; human verifies and submits.

Owners: PL; Bid Ops.

Outputs: Submitted package; proof of submission.

Exit criteria: Confirmation received; artifacts archived with hash/timestamp.
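Naming rules and the archived "as-sent" set are easy to automate. The sketch below assumes a hypothetical `<RFP-ID>_<Company>_<DocType>_v<N>.<ext>` convention, which should be replaced with the buyer's actual naming instructions. It records a SHA-256 hash of every file so the submitted package can later be shown to be unchanged.

```python
import hashlib
from datetime import datetime, timezone


def _safe(part):
    """Replace anything other than letters, digits, and hyphens with a hyphen."""
    return "".join(ch if ch.isalnum() or ch == "-" else "-" for ch in part)


def package_name(rfp_id, company, doc_type, version, ext):
    # Hypothetical convention: <RFP-ID>_<Company>_<DocType>_v<N>.<ext>
    return f"{_safe(rfp_id)}_{_safe(company)}_{_safe(doc_type)}_v{version}.{ext}"


def archive_manifest(files):
    """files maps file name -> bytes; returns a timestamped manifest of SHA-256 hashes."""
    return {
        "archived_at": datetime.now(timezone.utc).isoformat(),
        "files": {name: hashlib.sha256(data).hexdigest()
                  for name, data in sorted(files.items())},
    }


name = package_name("RFP-2025-014", "Acme Corp", "Technical Response", 3, "pdf")
print(name)  # RFP-2025-014_Acme-Corp_Technical-Response_v3.pdf
manifest = archive_manifest({name: b"example PDF bytes", "Pricing.xlsx": b"example sheet bytes"})
```

Re-hashing a file from the archive and comparing it with the manifest is enough to show that it matches what was sent.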

9) Post-mortem & knowledge uplift

Objective: Make the next one faster and better.

Actions:

- Log scored outcomes (wins/losses/shortlists), evaluator feedback, and content gaps.

- Promote edited passages back into the approved library with owners/expiries.

- Update win-probability model with latest signals.

Owners: PL, RevOps, Content Owners.

Outputs: Changelog, new/updated blocks, analytics (time to draft, review bottlenecks).

Exit criteria: Library refreshed; insights circulated.

Pro tips for scale

- Time-box Draft-1: 24–48 hours post-Go for most commercial RFPs; longer only for complex public-sector sets.

- Protect reviewers: Cap SME review time with confidence-based routing and section SLAs.

- Standardize skeletons: Keep buyer-specific templates (gov vs. enterprise) for instant structure.

- Measure the loop: Track % reuse, defect rate at final review, and hours per RFP—optimize monthly.

- Guardrails on by default: Block unsupported claims in security/legal sections to avoid rework.
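Once each submission logs a few numbers, the three loop metrics from the tips above are simple ratios. This sketch assumes per-RFP records with hypothetical field names for section counts, reused sections, defects found at final review, and hours spent.

```python
def loop_metrics(rfps):
    """Aggregate % reuse, defects at final review, and hours per RFP across submissions."""
    total_sections = sum(r["sections"] for r in rfps)
    reused = sum(r["reused_sections"] for r in rfps)
    return {
        "reuse_pct": 100 * reused / total_sections,
        "defects_per_rfp": sum(r["defects_at_final_review"] for r in rfps) / len(rfps),
        "hours_per_rfp": sum(r["hours"] for r in rfps) / len(rfps),
    }


quarter = [
    {"sections": 20, "reused_sections": 14, "defects_at_final_review": 3, "hours": 40},
    {"sections": 30, "reused_sections": 24, "defects_at_final_review": 1, "hours": 50},
]
print(loop_metrics(quarter))
# {'reuse_pct': 76.0, 'defects_per_rfp': 2.0, 'hours_per_rfp': 45.0}
```

Reuse is weighted by section count here, so larger RFPs count for more. Averaging per-RFP percentages instead would treat every bid equally.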

This playbook turns AI into a system of action—decide fast, draft grounded, review with precision, and submit clean—while compounding knowledge so every RFP after this one is easier.

Quality & compliance: keep humans in the loop

LLMs can draft beautifully, but they can also over-generalize or invent details (e.g., an impressive-sounding count of deployed systems). Treat AI as a proposal accelerator, not an oracle: spot-check numbers, timelines, and claim-to-evidence mapping before submission.
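One cheap guardrail against invented numbers is to check that every figure in a draft also appears in at least one cited source. The sketch below is a hypothetical and deliberately strict check. It matches numbers verbatim, so a legitimately rounded or reformatted figure will still be flagged for a human to confirm, which is what you want from a spot-check.

```python
import re

# Integers, decimals, thousands separators, and an optional percent sign.
NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")


def unsupported_numbers(draft, sources):
    """Return figures in the draft that do not appear verbatim in any cited source."""
    supported = set()
    for source in sources:
        supported.update(NUMBER.findall(source))
    return [n for n in NUMBER.findall(draft) if n not in supported]


draft = "We support 1,200 systems with 99.9% availability across 14 countries."
sources = [
    "Availability target: 99.9% monthly.",
    "Active deployments: 850 systems in 14 countries.",
]
print(unsupported_numbers(draft, sources))  # ['1,200']
```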

Example Structure AI Can Generate for You

Below is a buyer-ready outline AI can draft end-to-end. For each section, I’ve included what the AI typically auto-fills (grounded via RAG) and what humans usually review.

- Cover Letter
  - AI auto-fills: Issuer name, RFP ID/date, value proposition, contact details, signature block.
  - You review: Tone, key differentiators, exec sponsor name.

- Executive Summary
  - AI auto-fills: Buyer goals restated in their language, proposed approach, outcomes, proof points, 3–5 headline benefits.
  - You review: Strategic promises, measurable outcomes, competitive angle.

- Understanding of Requirements
  - AI auto-fills: Plain-English translation of scope, deliverables, constraints, dependencies; maps to buyer’s section/paragraph numbers.
  - You review: Any nuanced interpretations or out-of-scope clarifications.

- Compliance Matrix (Mandatory + Rated)
  - AI auto-fills: Row-by-row requirement → response location → evidence/citation → status (Meets/Exceeds/Exception).
  - You review: True compliance vs. “close enough,” exceptions, mitigations.

- Technical/Functional Approach
  - AI auto-fills: Architecture or methodology, tools, integrations, data flows, environments; diagrams if allowed.
  - You review: Tailoring to buyer stack, IP claims, depth vs. brevity.

- Implementation Plan & Timeline
  - AI auto-fills: Phased plan (Initiate → Discover → Configure → Test → Deploy → Stabilize), RACI, milestones, Gantt-style timeline.
  - You review: Dates, resource availability, critical path risks.

- Staffing & Qualifications
  - AI auto-fills: Role descriptions, named CV snippets, certifications, years of experience, prior engagements; auto-match to RFP criteria.
  - You review: Bench truth, substitutions, any required clearances.

- Security, Privacy & Compliance
  - AI auto-fills: Data handling, encryption, access control, logging, incident response, data residency; SOC 2/ISO/PCI statements and URLs; DPA summary.
  - You review: Buyer’s specific clauses, liability language, carve-outs.

- Service Levels (SLAs) & Support
  - AI auto-fills: Availability targets, response/restoration times, escalation paths, maintenance windows, credits schedule.
  - You review: Feasibility, financial exposure, alignment with standard terms.

- Risk Register & Mitigations
  - AI auto-fills: Top 8–12 risks (timeline, scope, data migration, change control), likelihood/impact, mitigation owners.
  - You review: Residual risk and acceptance.

- Training & Change Management
  - AI auto-fills: Audience-based curriculum, training formats, adoption KPIs, comms plan, hypercare.
  - You review: Buyer culture fit, localization needs.

- Quality Assurance & Testing
  - AI auto-fills: Test plan (unit/integration/UAT), entry/exit criteria, defect triage, acceptance steps.
  - You review: Tooling, test data constraints, performance benchmarks.

- Commercials: Pricing & Assumptions
  - AI auto-fills: Line-item tables (labor, licenses, T&M vs. fixed), options/extensions, inclusions/exclusions, taxes, payment terms; pulls from CPQ/rate cards/invoices.
  - You review: Margins, discounts, approval thresholds, cash-flow impact.

- Deliverables & Acceptance Criteria
  - AI auto-fills: Per-deliverable description, format, due date, sign-off roles, acceptance tests.
  - You review: Buyer-specific evidence of completion.

- References & Case Studies
  - AI auto-fills: Closest-fit stories by industry/region/size, outcomes (metrics), contact masking, permissions.
  - You review: NDA constraints, reference readiness.

- Governance & Reporting
  - AI auto-fills: Cadences (weekly/steering), KPIs, templates for status/RAID logs, escalation path.
  - You review: Named attendees, decision rights.

- Legal & Exceptions (if allowed)
  - AI auto-fills: Redlines summary, standard positions on indemnity, IP, liability caps, data protection.
  - You review: Deal strategy and fallback positions.

- Appendices & Required Forms
  - AI auto-fills: Checklists of mandatory forms, certificates, insurance, resumes, financials; fills repeat fields; applies file naming conventions.
  - You review: Originals vs. copies, signatures, dates.

Government vs. Enterprise variants (auto-applied presets)

- Government: Past contract values/durations, applicable statutes, accessibility (WCAG), FOIA sensitivity, data residency, small-business set-asides.

- Enterprise: InfoSec questionnaires (CAIQ/SIG-like), DPA/BAA references, SSO/SCIM details, integration runbooks, commercial T&Cs.

Portal submission mapping (if e-tender)

- AI auto-fills: Org profile fields, repetitive answers, attachment slots, question→answer cross-refs.

- You review: Final portal previews and any character/format limits.

Export-ready TOC (example)

- Cover Letter

- Executive Summary

- Understanding of Requirements

- Compliance Matrix

- Technical/Functional Approach

- Implementation Plan & Timeline

- Staffing & Qualifications

- Security, Privacy & Compliance

- SLAs & Support

- Risk Register & Mitigations

- Training & Change Management

- Quality Assurance & Testing

- Pricing & Commercial Assumptions

- Deliverables & Acceptance Criteria

- References & Case Studies

- Governance & Reporting

- Legal & Exceptions

- Appendices & Required Forms

How to use this: Treat it as your default skeleton. The AI fills each section with grounded content and citations; reviewers focus on risk, numbers, and nuance. Export to DOCX/PDF/portal—and you’re submission-ready.

Why SparrowGenie could be your perfect AI-first RFP Response Platform

SparrowGenie is secure, governed, and grounded. It drafts with retrieval-augmented generation from your approved answers, SLAs, security language, case studies, and resumes—then adds Confidence Scores so reviewers know exactly where to focus. Result? Accurate first drafts with citations, not free-written guesses.

Workflows are built for teams: section ownership, SLAs, and audit trails keep SMEs, legal, and sales in sync. A governed Knowledge Hub (owners, expiries, version history) prevents stale copy; resume/credential matching and requirement gap checks raise scoring and catch eligibility risks. Pricing intelligence seeds clean tables from CPQ/rate cards/invoices, with assumptions and options auto-added.

Submission stays calm: one-click DOCX/PDF/portal exports with naming rules, attachments, and completeness checks. Teams typically see 50–70% faster turnaround, 2–3× more RFPs handled, and higher win rates—all with an AI-secured, human-powered system of action that scales results, not stress.

Conclusion: From scramble to system

If your CRM is the system of record, AI for RFPs is the system of action. It reads the brief, maps the risk, drafts with receipts, prices with proof, and exports clean, so your team spends time winning, not wrangling. The payoff compounds: faster bids this quarter become a smarter library next quarter, which becomes higher win rates all year. That is how your 10-day RFP response marathon becomes a 2-day sprint.

If you’re serious about bidding smarter—not just faster—SparrowGenie gives you a governed, AI-secured system of action: grounded drafts, targeted reviews, and clean exports. Fewer fire drills, more shortlisted wins. Ready to turn RFP chaos into a repeatable edge?


Product Marketing Manager at SurveySparrow

A writer at heart and a marketer by trade, with a passion to excel! I live by the motto "Something New, Everyday"
